Making School Online Safety Alerts Work for Student Wellbeing

by Bec May

As we enter the 2026 school year, online safety alerting and reporting solutions have become a core component of many secondary school technology stacks.

With online learning now embedded in everyday teaching, students across the globe spend a significant portion of the school day connected to digital platforms, devices, and online resources. In the U.S., research shows that 65% of high school students use digital learning platforms, underscoring how much learning is now mediated by online systems.

This expanded access to technology-enabled learning brings clear educational benefits, but it also increases exposure to digital safety risks, including harmful content, unsafe situations, and inappropriate interactions. As a result, schools are expected to identify risks, limit access where appropriate, and take reasonable steps to protect students in the digital space. In this environment, online safety software that reports on harmful content and risky online behaviour has become a foundational part of many schools’ digital wellbeing practice.

However, an alert on its own doesn't keep a student safe.

If you asked your staff what happens after an alert is received at your school, would they know the answer? Would they know who is responsible for responding, when and how a situation should be escalated, and whether it should prompt further investigation, a wellbeing consultation, parental involvement, or no action at all?

If recent research is any guide, most of your staff may still be unsure what actually happens when an alert lands on someone's desk.

Creating an online safety framework to protect young people online

In the RM Online Safety Research Report, fewer than one in four staff working in maintained secondary schools in the UK reported being confident in their understanding of the online risks pupils face, and around one in five said they were not at all confident in recognising and responding to online safety incidents. Despite increasingly mature tech stacks and cybersecurity postures, schools are still struggling to bridge the gap between alerts and action.

As part of our Safer Internet Day initiative, we have created a practical guide to help schools close that gap.

The guide examines how online safety software fits into day-to-day school operations, with a particular focus on how alerts and reports connect to the internal processes schools are already using to respond to wellbeing concerns.

The guide contains clear, actionable guidance on turning online safety alerts into meaningful interventions. It is designed to help schools build a shared understanding of their online safety processes, concentrating on what happens after an alert, how decisions are made, and how technology can support consistent, wellbeing-led responses rather than operating in isolation.

Whether your online safety framework is well established or you are still working through questions of ownership, escalation, and accountability, we hope this guide provides practical value and helps address the realities of the always-on digital classroom.

Making online safety alerts effective in schools

When schools sit down to discuss improving online safety, the default response is often to add more alerts, implement another firewall policy, or scramble to find the latest software solution. This response is understandable in a climate dominated by online safety legislation, social media bans, and ongoing concern about students being exposed to adult content, scams, and online predators. The problem is that while you may feel you are gaining visibility, you are often just inviting more noise.

So while schools may be seeing more digital signals than ever before, that does not mean they are better equipped to interpret or respond to them. Research from the Cyber Safety Project on effective online safety practices shows that teacher confidence and ability to respond to online safety issues improve when schools adopt whole-school, evidence-based approaches and support staff with training and shared processes, something many schools have yet to implement.

The gap between signals and response is where online safety efforts often lose momentum in schools. Alerts keep arriving from across the network, and staff often feel under-equipped to make judgment calls without a shared frame of reference. Over time, we see this lead to alert fatigue, inconsistent responses to safe and unsafe situations, and growing frustration across the whole school as IT teams, wellbeing staff, teachers, and school leadership, each working in their own silo, struggle to align on ownership, escalation, and what an appropriate response actually looks like in practice.

This is why responses vary so widely from school to school, and sometimes even within the same school.

How do I respond when an online safety alert hits my desk?

This is a question we get a lot here at Fastvue. While there is no one-size-fits-all approach, schools can create shared frameworks that guide decision-making, clarify who reviews alerts, and set out how risk is assessed and what action makes sense in different situations.

A strong response to an online safety alert starts with triage, not a panicked, reactive response. The first step is to understand what the alert is actually showing, how serious it is in context, and who is best placed to respond.

Think of it like this. If a patient presents to a doctor with a cough, the doctor does not refer them to the hospital for treatment of pneumonia. That would be confusing a symptom with a diagnosis. Instead, the doctor asks questions, reviews the patient's history, looks for patterns, and rules out possibilities. Only then do they decide whether it is something minor, something to monitor, or something that needs urgent intervention.

Online safety alerts should be treated the same way.

An alert is a signal, not the whole story. It needs context, professional judgement, and an agreed process for deciding what happens next. When schools skip triage and jump straight to escalation, they create unnecessary anxiety, inconsistent responses, and missed opportunities for early conversations.

Before any action is taken, staff need access to context, not just a headline alert. That means being able to drill down into user activity and understand what actually happened.

At a practical level, this includes visibility into:

The exact search terms or websites involved

Timestamps and duration of activity

Historical patterns rather than a single moment in time

Whether the activity was isolated or part of a broader trend

This level of detail matters. A single keyword hit taken out of context can look alarming. But viewed alongside historical data and timing, it may clearly relate to online learning, classroom research, or age-appropriate exploration. Equally, patterns that emerge over days or weeks are far easier to identify when staff can see activity in sequence rather than as disconnected alerts.
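To make the difference between isolated activity and a developing pattern concrete, here is a minimal Python sketch. It assumes each flagged event carries a timestamp and a category, and it uses a 14-day look-back window and a three-occurrence threshold purely for illustration. `Event`, `classify_activity`, and both defaults are hypothetical, not features of Fastvue Reporter or any other product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """One flagged web event for a single student (hypothetical schema)."""
    timestamp: datetime
    category: str  # e.g. the filter category attached to the alert


def classify_activity(
    alert: Event,
    history: list[Event],
    window_days: int = 14,
    threshold: int = 3,
) -> str:
    """Label an alert as "isolated", "repeated" or "pattern".

    Counts earlier events in the same category that fall inside the
    look-back window. The window and threshold are illustrative defaults,
    not recommended values.
    """
    start = alert.timestamp - timedelta(days=window_days)
    prior = [
        e for e in history
        if e.category == alert.category and start <= e.timestamp < alert.timestamp
    ]
    if not prior:
        return "isolated"
    # Total occurrences = earlier events in the window plus this alert.
    if len(prior) + 1 < threshold:
        return "repeated"
    return "pattern"
```

In this sketch, an alert with two earlier same-category events inside the window is labelled a pattern, while an event from two months earlier is ignored. The label is a prompt for review, not a verdict.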

This level of visibility supports better judgment. It allows educators and wellbeing staff to move beyond assumptions and base decisions on evidence, context, and patterns. It also reduces unnecessary escalation by giving staff confidence to differentiate between low-level noise and situations that genuinely require support.

In short, effective triage depends on seeing the full picture before responding.

A helpful first review considers a small number of practical questions (we take you through how this works in our Playbook for Schools):

Context: What was the student doing online at the time? Was the search linked to online learning, research, videos, social media platforms, online games, or age-appropriate classroom activity?

Frequency: Is this a one-off event, or part of a developing pattern across websites?

Potential Impact: Does the behaviour suggest natural curiosity, poor judgment, or an unsafe situation that may affect the student's health, wellbeing, or safety?
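The ownership table later in this article refers to scores from 3 to 12. One scheme consistent with that range, assumed here rather than taken from the playbook, is to rate each of the three questions above from 1 (least concern) to 4 (most concern) and add the ratings. The rating labels and `triage_score` below are hypothetical and only illustrate the arithmetic.

```python
# Hypothetical 1-4 rating scales for the three triage questions.
# Higher numbers mean more concern; the labels are illustrative only.
CONTEXT = {
    "clear learning context": 1,
    "plausible curiosity": 2,
    "unclear context": 3,
    "no benign explanation": 4,
}
FREQUENCY = {
    "one-off": 1,
    "repeated this week": 2,
    "recurring over weeks": 3,
    "escalating": 4,
}
IMPACT = {
    "curiosity": 1,
    "poor judgement": 2,
    "possible harm": 3,
    "immediate safety concern": 4,
}


def triage_score(context: str, frequency: str, impact: str) -> int:
    """Add the three ratings into one score between 3 and 12.

    Raises KeyError if a label is not on the agreed scale, so reviewers
    cannot silently invent new categories.
    """
    return CONTEXT[context] + FREQUENCY[frequency] + IMPACT[impact]
```

For example, a one-off search with a clear learning context that suggests curiosity scores 1 + 1 + 1 = 3, while unclear context, a recurring pattern, and possible harm score 3 + 3 + 3 = 9. Staff still choose the ratings; the sum only makes results comparable across reviewers.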

Without this step, all suspicious activity is treated equally by default, even when the underlying risks differ significantly.

Clear ownership matters more than speed

Even the best triage process breaks down without clear ownership. It needs to be clear across the board who is responsible for reviewing alerts, who is accountable for decisions, who should be consulted, and who needs to be informed.

In schools where this is not defined, alerts tend to drift. IT teams may review activity but hesitate to act. Wellbeing staff may be looped in too late or too often. Teachers may be unsure whether an alert is theirs to handle or escalate. Leadership may only become aware once an issue has already grown.

A simple ownership model based on the classic RACI matrix, like the one below, removes this ambiguity.

| Task / Decision Point | Classroom Teacher | Wellbeing / Safeguarding Lead | Head of Year / Stage | Deputy Principal | IT Support | Principal | Parent / Carer |
|---|---|---|---|---|---|---|---|
| Monitor and triage alerts | R | A | C |  |  |  |  |
| Review mild behaviour incidents (score 3–4) | R | C | I |  |  |  |  |
| Manage moderate incidents (score 5–6) | C | R | A | R |  |  |  |
| Escalate serious/severe alerts (score 7–12) | I | R | C | A | C | I | R |
| Collect and document evidence | R | A | C | C |  |  |  |
| Initiate a wellbeing intervention or a digital safety session | I | R | C | A | C |  |  |
| Contact the parent/carer regarding behaviour | C | R | A | R |  |  |  |
| Escalate to police or external authority (if required) | I | C | R | C | A | I |  |
| Log incident in School Management System / Compass | R | A |  |  |  |  |  |
| Review and update the response policy | C | R | A | C | A |  |  |
| Review alert coverage and staff access lists | I | A | C | R |  |  |  |

How to read this table: it uses the RACI model, a widely adopted framework for clarifying roles and responsibilities in risk management and safeguarding processes.

R – Responsible: the person who carries out the task, for example, reviewing an alert, speaking with a student, or documenting an incident.

A – Accountable: the person ultimately answerable for the decision or outcome. There should be only one Accountable role per task.

C – Consulted: people who provide input or context before a decision is made, such as student history, learning context, or insights into wellbeing.

I – Informed: people who need to be kept informed about what’s happening but are not directly involved in decision-making or action.
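Because each task should have exactly one Accountable role, a school adapting this matrix can check it mechanically. The minimal Python sketch below assumes the matrix is stored as one list of codes per task, in the column order of the table; `ROLES`, `MATRIX`, and `raci_problems` are hypothetical names, and only three rows are transcribed. As transcribed from the table, the "Review and update the response policy" row has A in two columns, which is exactly the kind of slip this check surfaces.

```python
# Column order follows the ownership table.
ROLES = [
    "Classroom Teacher", "Wellbeing / Safeguarding Lead", "Head of Year / Stage",
    "Deputy Principal", "IT Support", "Principal", "Parent / Carer",
]

# Three rows transcribed from the ownership table; "" means no role assigned.
MATRIX = {
    "Monitor and triage alerts": ["R", "A", "C", "", "", "", ""],
    "Escalate serious/severe alerts (score 7–12)": ["I", "R", "C", "A", "C", "I", "R"],
    "Review and update the response policy": ["C", "R", "A", "C", "A", "", ""],
}


def raci_problems(matrix: dict[str, list[str]]) -> list[str]:
    """Return a message for every task that breaks the basic RACI rules:
    exactly one Accountable role and at least one Responsible role."""
    problems = []
    for task, cells in matrix.items():
        accountable = [role for role, code in zip(ROLES, cells) if code == "A"]
        if len(accountable) != 1:
            problems.append(f"{task}: {len(accountable)} Accountable roles {accountable}")
        if "R" not in cells:
            problems.append(f"{task}: no Responsible role")
    return problems


for problem in raci_problems(MATRIX):
    print(problem)
```

Run as-is, it prints a single line flagging the policy-review row. The same check also reports any task with no Responsible role.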

When schools clearly define roles for first review, decision-making, consultation, and communication, responses become calmer and more consistent. Alerts are handled once, by the right people, with fewer handovers and fewer assumptions.

This does not require new roles or more staff. It requires agreement.

Schools that respond well to online safety risks are clear about ownership. They know which educators or teams are responsible for the first review, who is accountable for decisions, and how communication flows to wellbeing teams, teachers, and parents.

When first-response processes are undocumented or unclear, however, the opposite happens. Alerts are escalated defensively, dismissed too quickly, or passed between teams without resolution. None of these outcomes offers the best opportunity for schools to promote online safety, protect children online, or support responsible digital citizenship.

"Isn't that just a whole lot more work for staff who are already stretched?" I hear you ask. The answer is no. Using our playbook, schools can take a short, focused period to establish clear internal frameworks. Once those are in place, the process usually saves time and resources, reducing alert noise, limiting unnecessary escalation, establishing ownership, and helping staff respond consistently and with confidence.

Evaluating unsafe situations: Why scoring matters more than instinct or algorithms

Automated online safety software is excellent at what it's built to do: identifying keywords, categories, and suspicious searches across web traffic at scale.

What these tools cannot do (yet) is understand intent, consent, or learning context. A keyword hit does not tell you whether a student was researching a topic for class, engaging with age-appropriate content, joking with friends, or signalling genuine distress. Algorithms surface signals. They don't interpret meaning.
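A toy keyword matcher shows why a hit carries no intent. The sketch below uses a hypothetical three-term watch list and whole-word matching; real filtering and alerting products use far richer techniques, and `WATCH_TERMS` and `keyword_hits` are illustrative names only.

```python
import re

# Hypothetical watch list; real products use far larger, curated lists.
WATCH_TERMS = {"overdose", "breast", "shooting"}


def keyword_hits(text: str) -> set[str]:
    """Return the watch-list terms that appear as whole words in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return WATCH_TERMS & words


searches = [
    "paracetamol overdose symptoms year 10 health assignment",
    "how much paracetamol is an overdose",
]
for query in searches:
    print(sorted(keyword_hits(query)), "<-", query)
```

Both searches produce the same hit, `['overdose']`, although the first reads like a health assignment and the second could reflect curiosity or genuine distress. The matcher cannot tell them apart; a person who knows the student and the lesson can.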

Ultimately, it is humans who need to bring that interpretation to the table. Teachers, wellbeing staff, and other educators understand their students and what's happening in their classrooms (and on the playground) in ways software likely never will.

But when human responses are based on instinct alone, without triage protocols and shared criteria, judgment becomes inconsistent. One person may see an alert as a conversation starter, while another may escalate the alert and contact parents or law enforcement. This inconsistency is confusing for students, unsettling for parents, and leaves schools exposed to complaints, misinterpretation, and preventable reputational risks.

This is where risk scoring can be of real benefit.

A risk-based triage framework introduces consistency without removing human judgement. Alerts are reviewed by staff who consider frequency and impact in context, rather than relying on keywords alone. These evaluations are then mapped to a defined response level.
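The score bands in the ownership table earlier in this article (3–4 mild, 5–6 moderate, 7–12 serious/severe) can be applied mechanically once staff have agreed a score. Below is a minimal Python sketch assuming the score is an integer from 3 to 12; `ResponseLevel`, `LEVELS`, and `response_level` are hypothetical names, and the Accountable roles are copied from the table rather than recommended. The mild row in the table has no Accountable role, so it is left as `None`. The function only routes the decision; staff still arrive at the score by reviewing the alert in context.

```python
from typing import NamedTuple, Optional


class ResponseLevel(NamedTuple):
    band: str
    action: str
    accountable: Optional[str]  # None where the table leaves "A" blank


# (upper bound of band, level), transcribed from the ownership table.
LEVELS = [
    (4, ResponseLevel("mild", "Review mild behaviour incidents", None)),
    (6, ResponseLevel("moderate", "Manage moderate incidents", "Head of Year / Stage")),
    (12, ResponseLevel("serious/severe", "Escalate serious/severe alerts", "Deputy Principal")),
]


def response_level(score: int) -> ResponseLevel:
    """Return the response level for a triage score from 3 to 12."""
    if not 3 <= score <= 12:
        raise ValueError(f"score must be between 3 and 12, got {score}")
    for upper, level in LEVELS:
        if score <= upper:
            return level
    raise AssertionError("unreachable: LEVELS covers 3 to 12")
```

A score of 5 maps to the moderate band with the Head of Year / Stage accountable; a score outside 3–12 raises an error rather than silently picking a band.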

In practice, this allows schools to:

Separate low-level noise from issues that require support

Reduce false positives that overwhelm wellbeing services

Respond consistently across similar online situations

Document decisions for reporting, accountability, and demonstrating reasonable steps for compliance

By linking alerts to defined response levels, schools avoid treating every signal as a crisis and ensure that genuinely serious risks are addressed promptly and consistently. It means staff know what to do, students receive proportionate responses, parents receive more transparent communication, and decisions are easier to explain and easier to defend. We suggest schools explain elements of the response frameworks in parent-facing communications so everyone is on the same page from the get-go.

Where AI alerting tools complicate online safety decisions

AI-powered alerting tools have significantly increased the volume of online safety alerts schools receive. These tools are designed to scan activity at scale, identify patterns, and surface potential risk more quickly than any one human (or team of humans) could. This capability is valuable. However, with the tools in their current iteration, schools are seeing complexity creep in.

AI alerting systems work by analyzing keywords, categories, language patterns, and behavioural signals. They are effective at detecting possibilities. What they cannot determine is meaning. Alerts generated by AI do not reflect intent, context, or the learning environment.

Without a clear triage framework, AI alerts can create a false sense of urgency. Staff may feel pressured to escalate simply because an intelligent system generated an alert. At the same time, the 'AI' label on these platforms can lull schools into a false sense of security. The reality is that AI alerting does not remove the need for human judgment; it increases it.

Schools using these tools effectively understand what AI alerts can and can't do. They treat them as indicators that require review, not conclusions that demand immediate action.

Scoring and triage matter just as much in AI-supported environments, allowing schools to use AI alerting as it was intended: to prioritize attention, not replace decision-making.
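Using AI alerting to prioritize attention, rather than to make decisions, can be as simple as a review queue. In this minimal Python sketch, a hypothetical `model_priority` number (assumed: higher means the tool considers the alert more urgent) only sets the order in which alerts reach a reviewer; every outcome is recorded by a named person. `QueuedAlert` and `ReviewQueue` are illustrative names, not a product API.

```python
import heapq
from dataclasses import dataclass, field
from typing import Optional


@dataclass(order=True)
class QueuedAlert:
    # heapq is a min-heap, so the priority is stored negated to pop the highest first.
    sort_key: float
    alert_id: str = field(compare=False)
    summary: str = field(compare=False)


class ReviewQueue:
    """Orders alerts for human review; it never decides an outcome itself."""

    def __init__(self) -> None:
        self._heap: list[QueuedAlert] = []
        self.decisions: dict[str, str] = {}

    def add(self, alert_id: str, model_priority: float, summary: str) -> None:
        """Queue an alert; model_priority only affects review order."""
        heapq.heappush(self._heap, QueuedAlert(-model_priority, alert_id, summary))

    def next_for_review(self) -> Optional[QueuedAlert]:
        """Return the highest-priority alert still waiting, or None."""
        return heapq.heappop(self._heap) if self._heap else None

    def record_decision(self, alert_id: str, reviewer: str, outcome: str) -> None:
        """Store the outcome chosen by a named reviewer, e.g. "no action"."""
        self.decisions[alert_id] = f"{outcome} (reviewed by {reviewer})"
```

The design choice is deliberate: the queue has no method that closes an alert on its own, so the model can change what staff look at first but never what happens next.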

Integrating online safety alerts into school safety education

Online safety alerts don't exist in isolation. They are present in schools, classrooms, families, and communities, where young people are learning to navigate the online world. Students move constantly between online learning platforms, social media platforms, online games, websites, messaging tools, and shared digital spaces (I know, it seems exhausting, right?). They are developing judgment, identity, and independence as they learn to use technology responsibly.

This interconnectedness is why alerts alone rarely deliver better outcomes for students navigating the digital world.

When schools rely too heavily on automated detection and reporting without a shared understanding of how alerts should be interpreted, the burden shifts to individual staff. Teachers, wellbeing teams, and IT staff are left to identify risks, analyze data, and decide how to respond, often without a consistent framework to guide them.

Over time, this creates uneven responses to both safe and unsafe situations and growing frustration across the school community.

We see these challenges play out in predictable ways:

Teachers receive alerts but are unsure whether they relate to learning, curiosity, or genuine risk

Wellbeing teams become overloaded with low-level issues that could have been handled through classroom conversation or safety education

IT teams are pulled into pastoral decisions they are not trained to make

Parents receive mixed messages about whether alerting and reporting software is being used to protect and support young people or to inform disciplinary action

Students struggle to understand whether online safety systems exist to protect them

However, when online safety alerts are part of a broader education and wellbeing approach, schools are better placed to respond thoughtfully rather than reactively.

In practice, this changes how schools respond to online safety alerts. It allows them to:

Recognise the difference between age-appropriate learning and unsafe situations

Distinguish curiosity from concerning behaviour

Respond proportionately based on severity, frequency, and impact

Use alerts as a starting point for age-appropriate conversations with students, offering advice and involving them in decisions about how they interact online

Encourage responsible use of technology instead of avoidance or fear

This is particularly important for secondary school students as they begin to engage with complex topics (and risks) online. Health education, bodily autonomy, consent, sexual identity, and relationships all show up naturally in searches, videos, and classroom resources. Without context, this activity can appear to be a red flag. With context, it often reflects learning, curiosity, or a student trying to make sense of the world. Handled well, these moments become opportunities for guidance, discussion, and support, rather than punitive action or unnecessary escalation.

Turning online safety alerts into meaningful action in schools

Online safety alerting and reporting tools are becoming an essential part of modern school environments, from Alabama to Australia. They help schools identify potential risks before it's too late. But alerts alone do not create safety.

Schools that respond well to online safety risks are not the ones with the most alerts or the most restrictive controls. They are the ones with clear workflows, shared expectations, and confidence in how decisions are made. They understand that technology surfaces signals, but people, supported by process, are the ones who protect students. The goal is not to react faster; it is to respond more effectively.

As part of our Safer Internet Day initiative, we have created a practical playbook for schools seeking to strengthen their response to online safety alerts in real-world settings.

This guide is designed to support any school using online safety monitoring and alerting tools, regardless of platform.

It helps schools to:

Define clear ownership and escalation pathways

Apply risk-based triage without removing human judgement

Reduce alert fatigue and unnecessary escalation

Align processes across IT, wellbeing, teaching staff, and leadership

Communicate more clearly with parents and carers

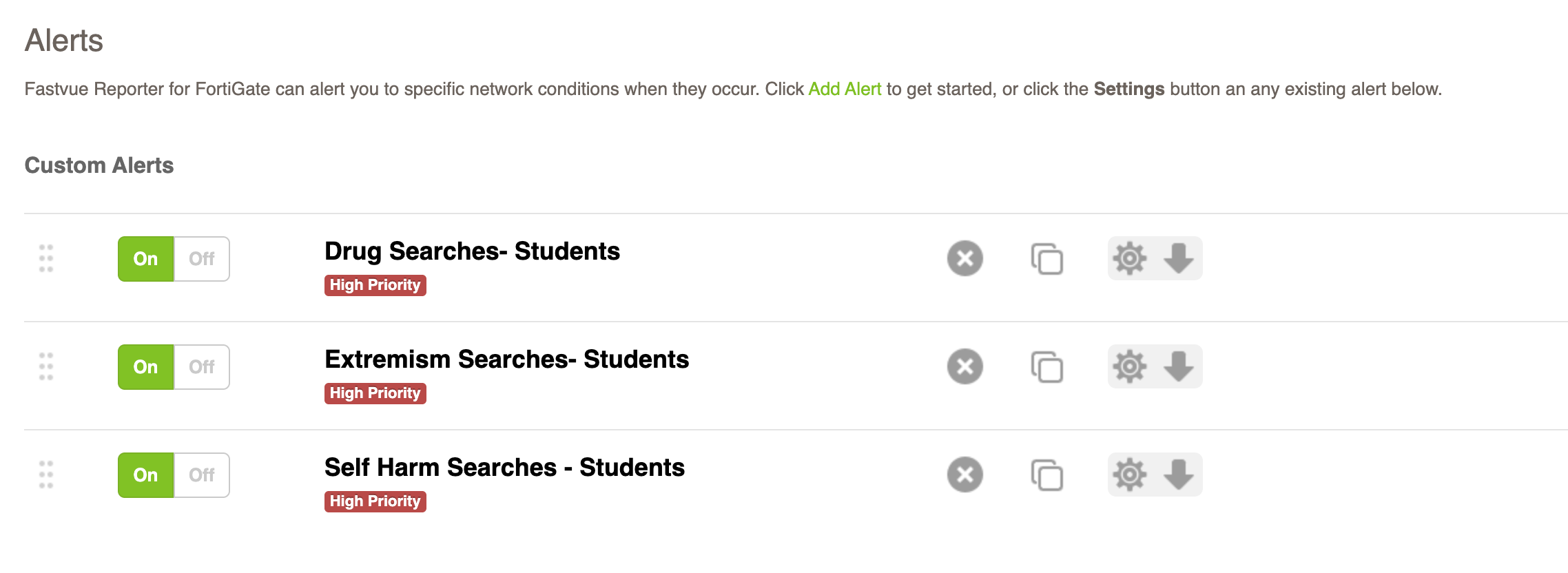

For schools using Fastvue Reporter for Education, we also offer an extended version tailored to your school environment. This includes support with daily alert setup, staff training, and aligning alerts to your existing safeguarding and wellbeing workflows so signals lead to action, not noise.

Or, if you’d like to see how this framework can be applied in your school using Fastvue, reach out to our team to learn more.

Don't take our word for it. Try for yourself.

Download Fastvue Reporter and try it free for 14 days, or schedule a demo and we'll show you how it works.
