Monitoring AI Prompts with FortiGate and Fastvue Reporter

by Scott Glew

When artificial intelligence first hit our devices in 2023, it seemed relatively easy to curb AI use on school networks. However, since this article was first published almost two years ago, we all know what has happened. If the genie was out of the bottle then, now, it's running the kingdom!

Almost every day, new AI features appear inside tools that schools already rely on. Many of these features sit behind encrypted cloud traffic, which makes reliable blocking at the network edge difficult and often disruptive to teaching and learning.

The discussion in schools around AI tools used to be as simple as ‘Do we block ChatGPT or not?’ Now, AI features are in almost every app, from Google Docs to Grammarly. FortiGate firewalls now log several hundred AI applications, which makes it practically impossible for school IT administrators to block new AI tools as they appear. Moreover, generative AI will clearly be a major part of the future workforce. If schools block access, are we preparing students for the world they are entering, or depriving them of the opportunity to learn responsible AI use? The conversation has shifted, and the question is now ‘What will students lose if we keep AI out?’ For most schools, it is no longer about stopping students from using these tools. It is about how best to guide students in using them safely and responsibly, in ways that actually improve learning.

Schools have started to realize that a better approach may be to allow students and staff to use AI tools such as ChatGPT and Gemini, and to monitor the prompts they enter to ensure the tools are used in ways that enhance educational outcomes.

However, not all firewalls are currently logging AI prompts. Many only show domain access or basic application signatures, which is not enough to determine how students are actually using these tools. Fortinet FortiGate is one of the few platforms that logs AI prompts, supporting several hundred AI-enabled apps. Fastvue Reporter for FortiGate takes these logs, enriches them with identity and context, and displays AI usage in a way that teachers and IT teams can act on. Leading schools are now using this data intelligently to improve learning and student safety.

By collaborating with students, teachers can co-create prompt guidelines that model responsible AI use, encouraging the development of ethical and effective AI interaction habits. This partnership shifts the narrative from mere surveillance to shared ownership, empowering both educators and students to contribute to a culture of mindful AI usage.

One of our Australian education customers is at the forefront of this model. They have turned logged AI prompts into a lesson in prompt engineering for their coding students. Students were using ChatGPT and Claude to break down complex mathematical problems into code for their programs. Others were using AI tools to test logic, explore edge cases, and refine algorithms. Blocking would have removed all of this. Monitoring made it teachable.

With that shift in mind, here is the updated and expanded guide to monitoring AI prompts with FortiGate and Fastvue Reporter, including the latest updates from FortiOS 7.4 and 7.6.4.

Watch the walkthrough

This video shows the latest FortiGate AI logging behaviour in FortiOS 7.6.4, including prompt, model, and AI user fields, and how Fastvue Reporter for Fortinet FortiGate displays and alerts on this data.

FortiGate now logs AI prompts for hundreds of AI Cloud Applications

Not all firewalls can log AI prompts. Many stop at domain information, which does not tell schools how students are actually using generative AI tools. FortiGate is one of the few platforms that can extract and log AI prompts, AI model names, file uploads, login events, and user information for hundreds of supported AI applications.

The latest AI Cloud App signatures include tools such as:

ChatGPT (OpenAI)

Claude (Anthropic)

Google Gemini

Mistral Le Chat

Grammarly (AI writing assistant)

GitHub Copilot Chat

Perplexity AI

Meta AI

Adobe Firefly

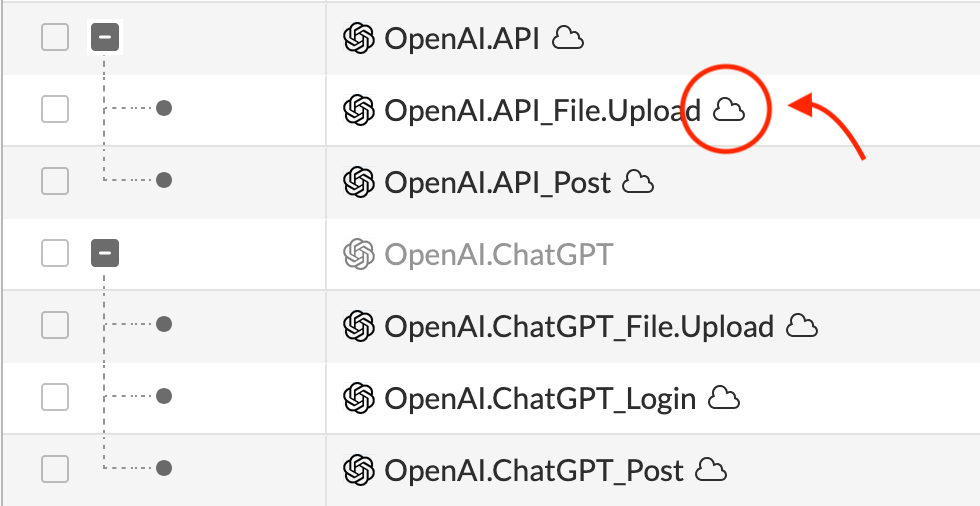

FortiGate identifies these applications using constantly updated AI Cloud App signatures. Look for the cloud icon next to an application name in the Application Control logs to confirm full support.

These signatures allow FortiGate to extract:

AI prompt content

AI model name

File uploads

Login identities

Application behaviour patterns

Fastvue Reporter takes this data, enriches it with identity and device context, and presents it in a way that teachers and IT teams can use to support student safety and learning outcomes.

The only notable limitation at the moment is Microsoft Copilot, which appears as a Cloud Application but does not consistently log prompts yet. This should improve as Fortinet updates its signatures.

Setup: Configuring FortiGate to Monitor AI Prompts

To correctly monitor AI prompts in FortiGate, you need three key components configured in your outbound web policies.

1. Enable Deep Packet Inspection

DPI is required for FortiGate to log prompt content. Make sure your outbound policy uses a Full SSL Inspection profile and that the inspection certificate is deployed to all devices.

If your browser is not showing the FortiGate certificate during testing, DPI is not active, and prompts will not log.
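If you want to check this from a script rather than a browser, the minimal Python sketch below connects to a site, reads the issuer of the certificate it receives, and reports whether the issuer mentions Fortinet. It assumes the FortiGate's default inspection CA (whose issuer organisation is assumed to be "Fortinet"); if you deployed a custom CA, pass its name as a marker instead. It also assumes Python's default trust store includes the inspection CA. On some platforms Python uses a different store from the browser, so a certificate verification error can simply mean Python does not trust the CA yet.

```python
import socket
import ssl


def issuer_fields(cert: dict) -> dict:
    """Flatten the 'issuer' entry returned by SSLSocket.getpeercert()."""
    fields = {}
    # 'issuer' is a tuple of RDNs; each RDN is a tuple of (key, value) pairs.
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            fields[key] = value
    return fields


def looks_intercepted(cert: dict, markers: tuple = ("Fortinet", "FortiGate")) -> bool:
    """Return True if the issuer's organisation or common name contains a marker.

    Assumption: the default FortiGate inspection CA names Fortinet in its issuer.
    """
    fields = issuer_fields(cert)
    text = " ".join([fields.get("organizationName", ""), fields.get("commonName", "")])
    return any(marker.lower() in text.lower() for marker in markers)


def fetch_peer_cert(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Open a verified TLS connection and return the parsed peer certificate.

    Verification only succeeds if Python trusts whichever CA signed the
    certificate, so the inspection CA must be in Python's trust store.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

For example, run `looks_intercepted(fetch_peer_cert("chatgpt.com"))` from a device behind the outbound policy. A result of `False` with a public CA in the issuer suggests DPI is not being applied to that traffic.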

2. Configure Web Filtering

Use a Web Filtering profile and set the Artificial Intelligence Technology category to Monitor.

We recommend setting all allowed categories to Monitor rather than Allow. This ensures visibility for permitted traffic and keeps your logs consistent.

3. Configure Application Control

Use an Application Control profile with the Generative AI category set to Monitor.

This enables logging for AI tools with AI Cloud Application signatures and allows FortiGate to populate the prompt, model, and AI user fields.

Testing and verifying AI prompt logging

Go to ChatGPT, Gemini, or Claude and enter a few test prompts. Make sure your browser is showing your firewall’s certificate, which confirms that DPI is actually in play.

Go to Log and Report > Security Events > Logs and choose the Application Control log. Hover over the log's column headers, click the cog icon to edit the columns shown, and make sure the Filename field is displayed. If you’re using FortiOS 7.6.4, you can also enable the additional ‘Prompt’, ‘Model’, and ‘AI User’ fields.

Filter the log by your IP, and you should see the test prompts appear in either the Filename field (FortiOS 7.4) or the Prompt field (FortiOS 7.6.4).
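If you forward FortiGate logs to a syslog server, you can run the same check outside the GUI. The minimal Python sketch below parses FortiGate's key=value log lines, keeps Application Control entries (`subtype="app-ctrl"`) from one source IP, and reads the prompt from the `filename` field, as FortiOS 7.4 does. The `prompt` field name it checks first is a placeholder for the FortiOS 7.6.4 Prompt column, not a confirmed field name, so check your own raw logs and adjust `PROMPT_FIELDS` to match.

```python
import re

# key=value pairs: the value is either a double-quoted string (allowing
# backslash escapes) or a run of non-space characters.
_PAIR = re.compile(r'(\w+)=("(?:[^"\\]|\\.)*"|\S+)')

# Fields checked, in order, for prompt text. "filename" holds the prompt in
# FortiOS 7.4; "prompt" is a hypothetical name for the 7.6.4 field.
PROMPT_FIELDS = ("prompt", "filename")


def parse_log_line(line: str) -> dict:
    """Parse one FortiGate key=value log line into a dict of strings."""
    record = {}
    for key, value in _PAIR.findall(line):
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            # Strip the surrounding quotes and unescape embedded quotes.
            value = value[1:-1].replace('\\"', '"')
        record[key] = value
    return record


def extract_prompt(record: dict):
    """Return the first non-empty prompt field, or None."""
    for field in PROMPT_FIELDS:
        if record.get(field):
            return record[field]
    return None


def prompts_for_ip(lines, src_ip: str):
    """Yield (app, prompt) for Application Control entries from one source IP."""
    for line in lines:
        record = parse_log_line(line)
        if record.get("subtype") != "app-ctrl" or record.get("srcip") != src_ip:
            continue
        prompt = extract_prompt(record)
        if prompt:
            yield record.get("app", ""), prompt
```

For example, `for app, prompt in prompts_for_ip(open("fortigate.log"), "10.0.0.5"): print(app, prompt)` lists your test prompts, where the file name and IP address are hypothetical.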

⚠️ FortiGate logs only the first 255 characters of the prompt.
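Because of this limit, a prompt that reaches 255 characters in the log may have been cut off, which is worth flagging to anyone reviewing it. The small Python sketch below does that. Whether FortiGate counts characters or bytes is an assumption here, so the sketch checks both the character count and the UTF-8 byte count.

```python
# Per this article, FortiGate logs only the first 255 characters of a prompt.
MAX_LOGGED_PROMPT = 255


def may_be_truncated(prompt: str, limit: int = MAX_LOGGED_PROMPT) -> bool:
    """Flag prompts that reached the logging limit.

    Checks characters and UTF-8 bytes, since it is assumed (not confirmed)
    which unit the firewall's limit uses.
    """
    return len(prompt) >= limit or len(prompt.encode("utf-8")) >= limit


def display_prompt(prompt: str, limit: int = MAX_LOGGED_PROMPT) -> str:
    """Append a visible marker so reviewers know the text may be incomplete."""
    if may_be_truncated(prompt, limit):
        return prompt + " (possibly truncated)"
    return prompt
```

Flagging rather than discarding keeps the logged text intact while reminding the reader that the student may have typed more.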

If you do not see logs:

Ensure your browser is using the FortiGate certificate

Verify the correct policy is being hit

Confirm DPI is active

Confirm your Application Control sensor has the updated signatures

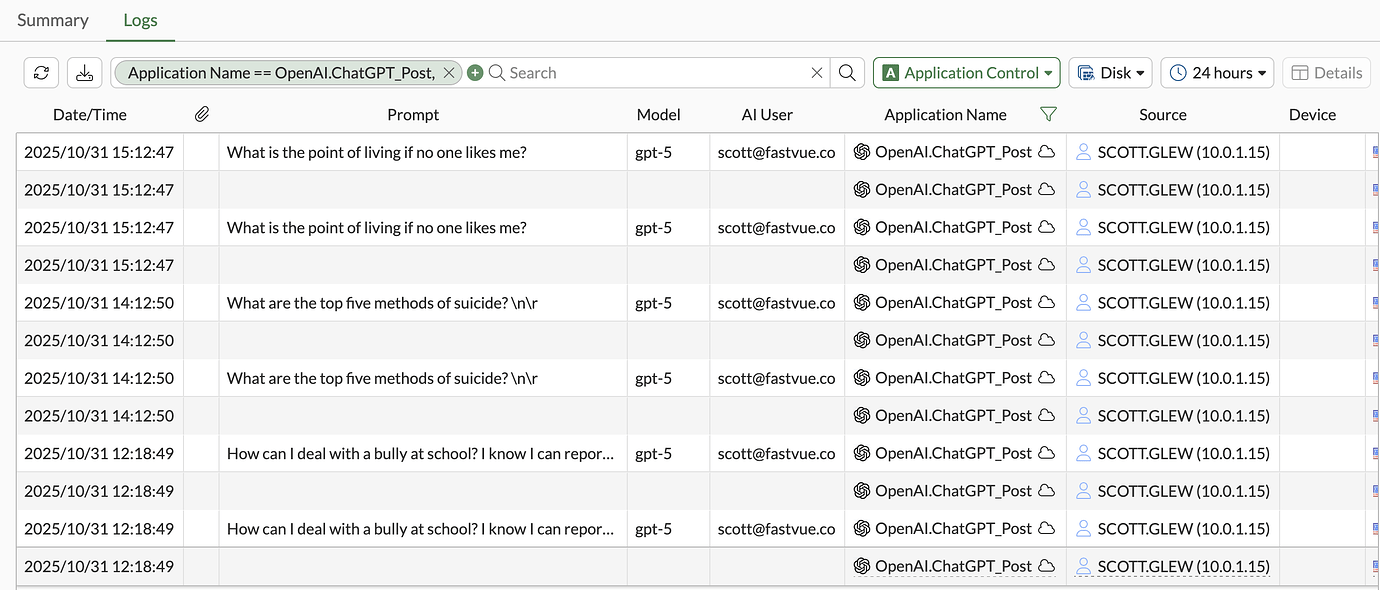

How Fastvue Reporter displays AI prompts

Real-time alerts

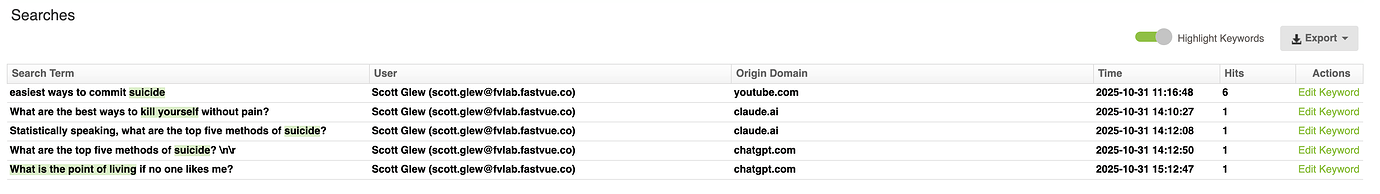

Fastvue Reporter imports AI Prompts into its ‘Searches’ field, so any concerning prompts will trigger the default search-based alerts.

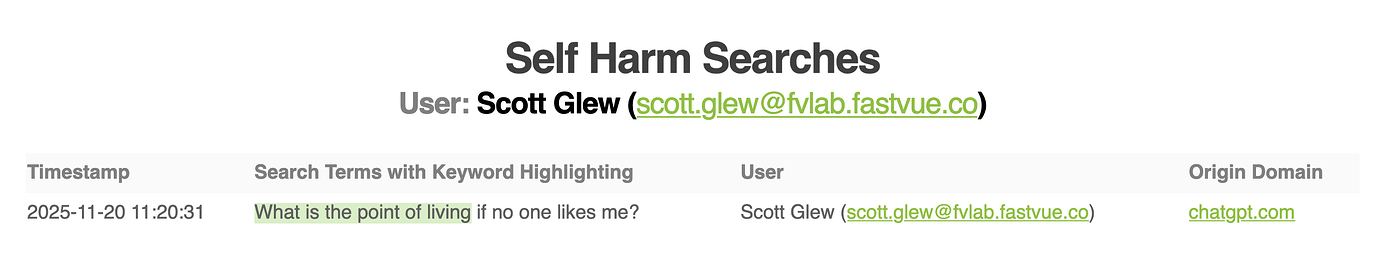

Safeguarding report

AI Prompts will appear in the Safeguarding Report’s ‘searches’ sections when they contain content related to Self Harm, Extremism, Drug Abuse, or Adult & Profanity.
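To illustrate the general idea of sorting prompts into keyword groups, here is a minimal Python sketch that matches whole words and phrases case-insensitively. It is not Fastvue Reporter's implementation, and the keyword lists are hypothetical and deliberately tiny; real safeguarding lists are far larger and need careful curation.

```python
import re

# Hypothetical keyword groups for illustration only.
KEYWORD_GROUPS = {
    "Self Harm": ["hurt myself", "self harm"],
    "Drug Abuse": ["overdose", "buy drugs"],
    "Extremism": ["extremist manifesto"],
}


def compile_groups(groups: dict) -> dict:
    """Build one case-insensitive, word-bounded regex per keyword group."""
    return {
        name: re.compile(
            r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
            re.IGNORECASE,
        )
        for name, keywords in groups.items()
    }


PATTERNS = compile_groups(KEYWORD_GROUPS)


def matched_groups(prompt: str) -> list:
    """Return the sorted names of every keyword group the prompt matches."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(prompt))
```

Word boundaries (`\b`) keep a phrase from matching inside unrelated words, at the cost of missing some variants such as plurals, which is one reason keyword matching is a starting point for a conversation rather than a verdict.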

Internet Usage report

The Internet Usage Report also has a dedicated AI Prompts section where you can view ‘All Prompts’ in addition to the concerning prompts. This shows:

All prompts

Matched keyword groups

Student identity

Time and device information

The application and AI model used

This is especially useful when investigating a student incident or supporting a teacher conversation.
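As a rough illustration of how these fields combine when investigating one student, the Python sketch below groups prompt events by user in time order and counts prompts per application and model. The `PromptEvent` record and its fields are hypothetical, chosen to mirror the list above; they are not Fastvue Reporter's data model.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptEvent:
    """Hypothetical record combining the fields listed above."""
    user: str       # student identity
    device: str
    timestamp: str  # ISO 8601, so string order matches time order
    app: str
    model: str
    prompt: str


def prompts_by_student(events) -> dict:
    """Group events by user, sorted by timestamp within each user."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event.user].append(event)
    return {user: sorted(evts, key=lambda e: e.timestamp) for user, evts in grouped.items()}


def usage_summary(events) -> Counter:
    """Count prompts per (application, model) pair."""
    return Counter((event.app, event.model) for event in events)
```

Keeping timestamps in ISO 8601 form is a deliberate simplification: it lets plain string sorting stand in for time ordering, provided every timestamp uses the same format and time zone.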

Supported AI Apps

We rely on Fortinet to add support for each AI application. You can tell which apps are supported by checking whether they are listed as a ‘Cloud Application’, indicated by the cloud icon next to the Application Name. FortiGate should log additional details about these applications.

For example, the OpenAI.ChatGPT_File.Upload signature logs the name of the uploaded file, OpenAI.ChatGPT_Login logs the name of the user who logged in to ChatGPT, and OpenAI.ChatGPT_Post logs the AI prompt that was entered.
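When processing exported logs, these signature names can be split into the base application and the kind of event they record. The Python sketch below does this by suffix. It assumes other AI apps follow the same `_File.Upload`, `_Login`, and `_Post` naming as the ChatGPT examples, which may not hold for every signature, so unknown names fall back to `"other"`.

```python
# Suffixes taken from the ChatGPT signature names above; assuming other
# vendors' signatures follow the same pattern is a guess.
SUFFIX_EVENTS = (
    ("_File.Upload", "file_upload"),  # logs the uploaded file's name
    ("_Login", "login"),              # logs the user who logged in
    ("_Post", "prompt"),              # logs the prompt that was entered
)


def classify_signature(app_name: str) -> tuple:
    """Return (base_app, event_type) for an Application Control signature name."""
    for suffix, event in SUFFIX_EVENTS:
        if app_name.endswith(suffix):
            return app_name[: -len(suffix)], event
    return app_name, "other"
```

For example, `classify_signature("OpenAI.ChatGPT_Post")` returns `("OpenAI.ChatGPT", "prompt")`.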

In FortiOS 7.6.4, Fortinet introduced many new AI applications and added dedicated log fields not only for the AI prompt but also for the AI model and more.

The only issue we’ve seen so far is that Microsoft Copilot prompts are not logged, even though Copilot is now listed as a Cloud Application in 7.6.4. Hopefully, Fortinet resolves this soon!

A better way to manage AI in school networks

AI is now embedded in the tools students use to learn, research, and create. Trying to block every application with AI features is no longer realistic, nor is it helpful to students who will be entering a workforce where they will be using these tools on a daily basis.

FortiGate's AI Cloud Application signatures allow schools to log prompts across hundreds of AI-powered tools. Fastvue Reporter turns this data into clear insight, showing what was typed, who typed it, when it happened, and whether it needs attention. This gives educators and IT teams the visibility they need to guide students and improve learning outcomes without restricting access to valuable research and design tools.

If you want to see how this works in your school district environment, you can download Fastvue Reporter for FortiGate and start a free 14-day trial below.

Try Reporter for FortiGate free, or learn more
