Staying Ahead of Australia’s Social Media Ban: A School Network Readiness Guide

by Bec May

As an Australian educator, you are no doubt aware that from December 2025, under-16s will be restricted from having accounts on major social media platforms under amendments to the Online Safety Act.

The risks of social media use are now well documented: we know these sites are rife with harmful content such as child sexual abuse material, extremist rhetoric, and self-harm encouragement. This harmful content is a key reason the ban was introduced.

What we don’t yet know is how this ban will actually play out behind the school gates.

While the enforcement of the minimum age obligation lies with social media companies, schools will no doubt still be on the frontline of the fallout. When access fails, workarounds begin. When platforms tighten up, behaviours shift elsewhere. And when those behaviours put students at risk, it’s schools that will be expected to notice, respond, and take action.

There are also growing concerns about censorship and what this means for the future of digital rights and access to information. Locking down platforms without clear, enforceable mechanisms risks pushing young people into darker, less-regulated corners of the internet. It’s not just about what gets blocked — it’s about what students turn to next, and whether schools are equipped to monitor and respond when they do.

Today's students are resourceful, determined, and technologically savvy. They're digital natives who know how to bypass filters, use VPNs, and deploy spoofing tools. Moreover, in recent government trials, leading age assurance technologies could only estimate a person's age to within 18 months in 85 per cent of cases. Experts are already saying that, with current tools, the ban may not be enforceable in any meaningful way.

Come December, social media use won't vanish; it will evolve. And regardless of how educators feel about the ban on a political level, schools will need to evolve their response in kind.

That means:

Aligning your digital policies with the federal government’s basic online safety expectations

Reviewing how your filtering and monitoring systems detect and report social media activity

Understanding the platforms, tools, and tactics students are using

Supporting wellbeing teams with fast, contextual insight into online behaviour

Working with caregivers and supporting parents beyond the school gate

Ensuring staff can act early, respond appropriately, and document everything clearly

This guide outlines what your school can do now, before enforcement begins, to prepare your network, support your students, and stay one step ahead.

Know your grey areas: YouTube and beyond

When the social media ban legislation was introduced in Parliament in November 2024, the government announced YouTube would be exempt from the age restrictions, initially citing its value in education and health support for young people.

The Government has since done a 180 on this, citing eSafety Commissioner research showing that 76% of 10- to 15-year-olds use the platform and that 37% of kids who encountered harmful content online found it on YouTube. The platform's autoplay, infinite scroll, and algorithmic feed have also been linked to anxiety, depression, and insomnia. And, let's be honest, most of us have lost a few hours down a YouTube rabbit hole.

As adults, the fallout might be a missed bedtime or a foggy morning. For teens, it can mean falling into damaging echo chambers, triggering content loops, or exposure to harmful narratives when they’re least equipped to deal with it.

The complication? Students will still be able to access YouTube without logging in, meaning the platform falls outside the scope of most age assurance technologies and, therefore, outside the ban.

On top of that, once students turn 16, they are legally allowed to access social media services.

So, where does this leave schools?

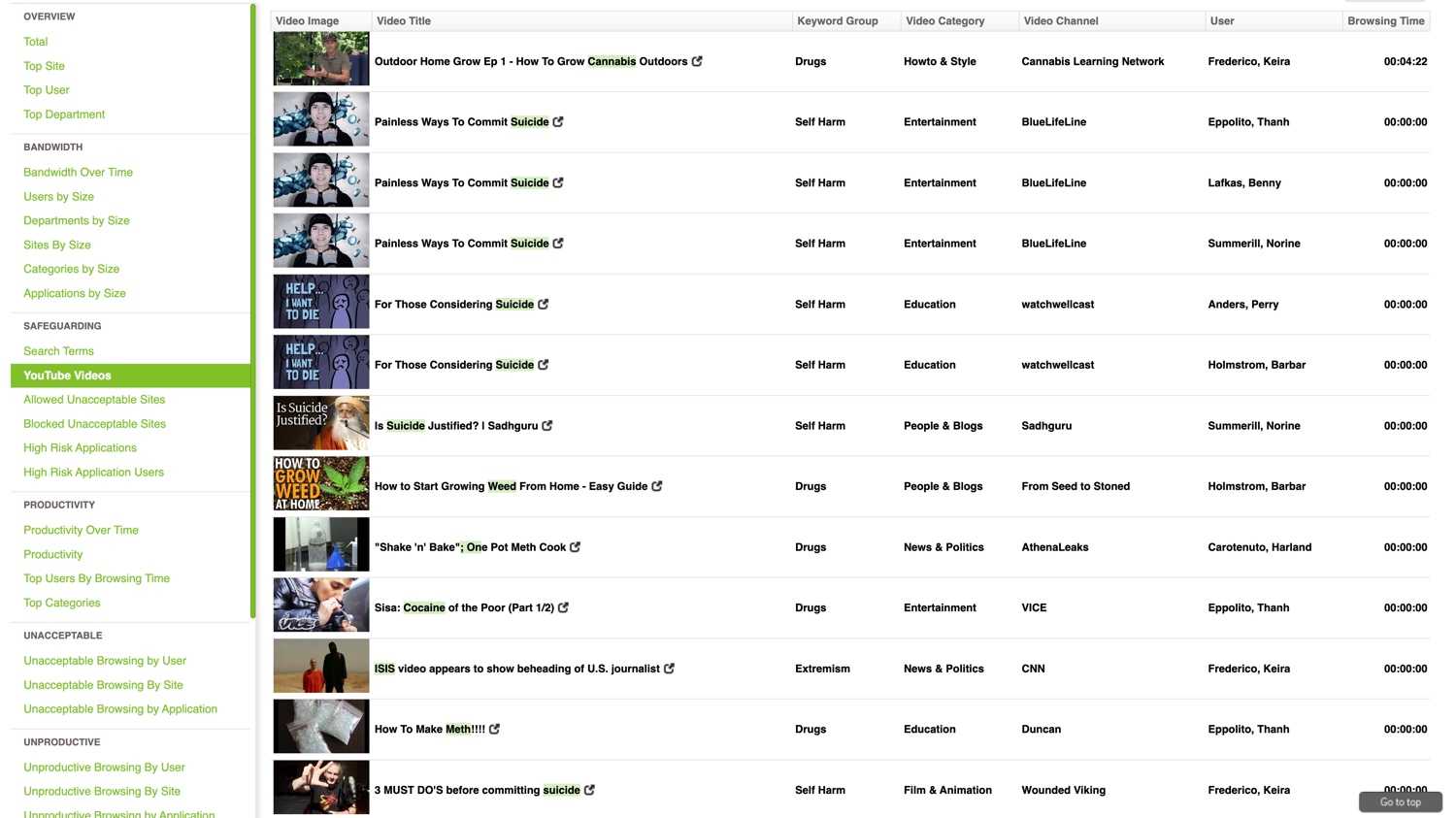

While blocking YouTube altogether isn't practical, especially given its widespread use in teaching, schools can use reporting tools like Fastvue Reporter to monitor student access, detect risky patterns, and surface the content students are actually engaging with.

Schools can:

Report YouTube usage at the video title level, with timestamps of exactly what was viewed and for how long

Monitor by year group and content category

Flag high engagement with high-risk and trending content

YouTube might be a grey area, but it doesn't have to be a blind spot.
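As a rough illustration of what video title-level reporting involves, the Python sketch below totals viewing time per year group and title, lists the most-watched titles for a year group, and flags titles that cross an engagement threshold. It assumes a hypothetical export of YouTube log records with `year_group`, `video_title`, and `seconds_watched` fields; those names, and the threshold, are illustrative assumptions, not Fastvue Reporter's actual schema or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class VideoView:
    # Hypothetical log record; field names are assumptions for illustration.
    student_id: str
    year_group: int
    video_title: str
    seconds_watched: int


def watch_time(views):
    """Total seconds watched per (year group, video title)."""
    totals = defaultdict(int)
    for v in views:
        totals[(v.year_group, v.video_title)] += v.seconds_watched
    return dict(totals)


def top_titles(views, year_group, n=3):
    """The n most-watched titles for one year group, longest viewing time first."""
    rows = [(title, secs) for (yg, title), secs in watch_time(views).items() if yg == year_group]
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows[:n]


def flag_high_engagement(views, threshold_seconds):
    """(year group, title) pairs whose total viewing time meets the threshold."""
    return {key for key, secs in watch_time(views).items() if secs >= threshold_seconds}


views = [
    VideoView("s1", 8, "Title A", 300),
    VideoView("s2", 8, "Title A", 200),
    VideoView("s3", 8, "Title B", 100),
    VideoView("s4", 10, "Title A", 50),
]
print(top_titles(views, 8))              # [('Title A', 500), ('Title B', 100)]
print(flag_high_engagement(views, 400))  # {(8, 'Title A')}
```

Aggregating by title and year group, rather than by domain, is what lets the same `youtube.com` traffic be read as either a maths tutorial or a concerning content loop.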

Watch for workarounds: VPNs, proxies, and hotspots

When direct access to social media and messaging apps is blocked, whether through a firewall or age verification technologies, you know students will find an alternative way. VPNs, proxy sites, and increasingly AI-powered evasion tools make it easy(ish) to get around restrictions without triggering alerts.

These tools are evolving at a dizzying pace; some leverage AI to rotate IPs, spoof browser fingerprints, or disguise encrypted traffic to look like standard web use. Others simply help students find the newest unblocked proxy or generate scripts to hide their activity.

And yet, most school networks still rely on basic blocklists and surface-level traffic logs, often missing deeper signals of risk.

So, where does this leave schools?

Blocking domains is no longer enough. Schools need to monitor behaviour: who is trying to access what digital platforms, how often, and using which tactics. Tools like Fastvue Reporter allow you to go beyond simple access logs and see the patterns behind them to:

Set alerts for attempts to access known VPN sites or suspicious domains

Track searches for VPNs, proxy tools, and bypass instructions

Monitor underage access to social media sites and applications

Identify ‘unblocked’ sites that are sneaking through content filtering due to mis-categorisation

List all students with VPN applications or extensions installed

Investigate student activity and identify encrypted or unclassified traffic
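To show the idea behind bypass alerts, here is a minimal Python sketch that counts, per student, searches that look like bypass attempts and requests to known VPN or proxy domains, then lists the students who reach an alert threshold. The keyword patterns, the domain list (which uses the reserved `.example` TLD), the `Event` format, and the threshold of 3 are all hypothetical; in practice you would rely on your firewall's own VPN and proxy categories rather than a hand-maintained list.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately tiny lists for illustration only.
BYPASS_SEARCH_PATTERNS = [
    re.compile(r"\bvpn\b", re.IGNORECASE),
    re.compile(r"\bproxy\b", re.IGNORECASE),
    re.compile(r"\bunblock(ed)?\b", re.IGNORECASE),
    re.compile(r"\bbypass\b", re.IGNORECASE),
]
KNOWN_VPN_DOMAINS = {"vpn.example", "freeproxy.example"}


@dataclass
class Event:
    student_id: str
    kind: str   # assumed event types: "search" or "request"
    value: str  # the search text, or the requested hostname


def is_vpn_domain(host):
    """True if host is a listed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in KNOWN_VPN_DOMAINS)


def is_bypass_attempt(event):
    """Classify a single event as a likely bypass attempt."""
    if event.kind == "search":
        return any(p.search(event.value) for p in BYPASS_SEARCH_PATTERNS)
    if event.kind == "request":
        return is_vpn_domain(event.value)
    return False


def bypass_attempts(events):
    """Number of likely bypass attempts per student."""
    counts = {}
    for e in events:
        if is_bypass_attempt(e):
            counts[e.student_id] = counts.get(e.student_id, 0) + 1
    return counts


def students_to_alert(events, threshold=3):
    """Students whose attempt count meets the (assumed) alert threshold."""
    return sorted(s for s, c in bypass_attempts(events).items() if c >= threshold)


events = [
    Event("s1", "search", "best free VPN for school"),
    Event("s1", "request", "app.vpn.example"),
    Event("s1", "search", "unblocked games"),
    Event("s2", "search", "how to bypass the filter"),
    Event("s2", "request", "news.example"),
    Event("s3", "search", "maths homework help"),
]
print(students_to_alert(events))  # ['s1']
```

Counting repeated attempts per student, rather than alerting on every single hit, is one way to keep a curious one-off search from generating the same noise as a sustained effort to get around the filter.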

Break it down: Reporting on social media use by year level and platform

Not all year groups engage with the online world in the same way. The risks for Year 5 students exploring Roblox are very different from Year 10 students engaging with TikTok trends.

Traditional firewall reports don't reflect these nuances. They're built for IT teams and show raw logs, not who's doing what, when, and how often.

So, where does this leave schools?

Fastvue Reporter gives you the power to break it down. You can generate reports by year level, filter by category=social media, and instantly see which groups are accessing which platforms, when, and how frequently:

Pull usage data for social media categories and behaviours across specific year levels

Compare trends between groups to spot shifts in student behaviour

Identify high‑engagement platforms and time‑of‑day patterns

Share focused insights with pastoral teams, year leads, or curriculum heads

Use historical data to track the impact of digital policies or parent outreach

This kind of reporting gives school leaders evidence, not assumptions, and supports safeguarding and operational decisions without drowning your team in logs.
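For readers who want to see the mechanics, this Python sketch shows the kind of grouping behind a year-level breakdown: counting social media hits per year level and platform, finding a year level's busiest hour, and comparing two periods (for example, before and after a parent outreach campaign). The `Hit` record and the "Social Media" category label are hypothetical assumptions, not Fastvue Reporter's data model.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Hit:
    # Hypothetical, already-categorised web log record.
    year_level: int
    category: str   # assumed category label, e.g. "Social Media"
    platform: str
    hour: int       # local hour of day, 0-23


def platform_usage(hits, category="Social Media"):
    """Hit counts per (year level, platform) within one category."""
    return Counter((h.year_level, h.platform) for h in hits if h.category == category)


def peak_hour(hits, year_level, category="Social Media"):
    """Busiest hour of day for one year level in a category, or None if there are no hits."""
    hours = Counter(h.hour for h in hits if h.year_level == year_level and h.category == category)
    return hours.most_common(1)[0][0] if hours else None


def change_between(before, after, category="Social Media"):
    """Per (year level, platform) change in hit counts from one period to the next."""
    b, a = platform_usage(before, category), platform_usage(after, category)
    return {key: a[key] - b[key] for key in set(a) | set(b)}


term_1 = [
    Hit(7, "Social Media", "TikTok", 15),
    Hit(7, "Social Media", "Snapchat", 12),
    Hit(7, "Games", "Roblox", 15),
]
term_2 = [
    Hit(7, "Social Media", "TikTok", 15),
    Hit(7, "Social Media", "TikTok", 15),
    Hit(7, "Social Media", "Snapchat", 9),
    Hit(10, "Social Media", "TikTok", 20),
]
print(peak_hour(term_2, 7))  # 15
```

Using a `Counter` means a platform that disappears between periods simply counts as zero, so `change_between` reports both new and vanished platforms without special cases.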

Equip wellbeing and pastoral care teams with context, not noise

Pastoral care and wellbeing teams are increasingly expected to make sense of their students' digital worlds, often with little more insight than a blocked keyword, an alert that's a red herring, or a gut feeling that something's not right. However, it's almost impossible to address these issues properly without any granular evidence with which to start the conversation.

The problem? Most tools generate more noise than clarity. They may alert when an advert is blocked, but they won't uncover the actual website the user was engaging with. They can highlight a keyword but ignore legitimate uses of that word (e.g. "sex chromosomes" or "shooting star") and how long the user spent engaging with the associated content.

So, where does this leave schools?

Firewalls can only tell you so much about online safety. Your wellbeing team doesn't need firewall logs; they need context. Fastvue Reporter gives them just that: a clear picture of how students are behaving online over time, allowing pastoral care teams to:

Detect patterns linked to self-harm, grooming, and other online risks using keyword groups

View video titles, search terms, and access frequency, not just 'site visited'

Filter by year level, time of day, or risk type to support early intervention

Avoid alert fatigue with tailored real-time notifications
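Keyword groups work best when they can tell a risky search from a harmless one. The Python sketch below illustrates one simple approach, assuming a hypothetical `KeywordGroup` made of trigger terms plus benign exception phrases (echoing the "sex chromosomes" and "shooting star" examples above) that are blanked out before whole-word matching. It illustrates the idea only and is not how Fastvue Reporter implements keyword groups.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class KeywordGroup:
    # Hypothetical keyword group: trigger terms plus benign phrases that
    # should not raise an alert on their own.
    name: str
    terms: tuple
    exceptions: tuple = ()

    def matches(self, text):
        """True if any trigger term appears as a whole word outside the exception phrases."""
        lowered = text.lower()
        # Blank out benign phrases first, so "shooting star" cannot trigger "shooting".
        for phrase in self.exceptions:
            lowered = lowered.replace(phrase.lower(), " ")
        return any(re.search(r"\b" + re.escape(t.lower()) + r"\b", lowered) for t in self.terms)


def flag_searches(searches, groups):
    """(student_id, group name, search text) for every search that matches a group."""
    return [
        (student, group.name, text)
        for student, text in searches
        for group in groups
        if group.matches(text)
    ]


groups = [
    KeywordGroup("violence", ("shooting",), ("shooting star",)),
    KeywordGroup("adult", ("sex",), ("sex chromosomes",)),
]
searches = [
    ("s1", "x and y sex chromosomes"),
    ("s2", "how to photograph a shooting star"),
    ("s3", "shooting range near me"),
]
print(flag_searches(searches, groups))  # [('s3', 'violence', 'shooting range near me')]
```

Because only the exception phrase itself is removed, a search that contains both a benign phrase and a separate trigger word (such as "shooting star and a school shooting") still matches.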

Empower families beyond the school gates

Schools are under increasing pressure to manage what young people do online, even when it's happening on personal devices, after hours, and off school grounds. But the truth is, you can't be everywhere, and you shouldn't have to be.

Teachers and wellbeing staff are already stretched. They're not network analysts or 24/7 moderators. Yet when online issues escalate, whether it's social media conflicts or exposure to harmful content, the fallout almost always lands at the school's feet.

So, where does this leave schools?

It's time to shift the weight. Schools can play a leading role in helping Australian caregivers to navigate the ban on social media accounts — one of the toughest parenting challenges of the 21st century.

Schools can do this by helping parents and carers understand the risks of the digital world, set age-appropriate boundaries, and take ownership of what happens at home and on personal devices. By sharing meaningful, digestible insights, schools can collaborate with parents to protect children online, rather than bearing the brunt of the responsibility alone.

Provide regular updates on what's happening on your network, from VPN use to platform shifts, and key trends in social media use, online gaming, and content searches.

Include guidance on age restrictions, filtering tools, and app store settings.

Share clear expectations about what is monitored at school and how concerns are dealt with. We have a range of policy templates and communication guides to support you in this process; simply reach out, and we'll send them your way.

Reinforce that supporting parents is part of the school's strategy for protecting children online, and that it's not about extending school oversight.

Quick-glance checklist: Staying ahead of Australia’s social media ban

| Area | Action |
|---|---|
| Digital Policy Alignment | Review and align policies with the federal government’s basic online safety expectations and the updated Online Safety Act |
| Filtering & Monitoring | Ensure your firewall and reporting tools can detect and report social media use, including attempts to access banned or grey-zone social media platforms. Enable deep packet inspection (DPI) on your firewall so that video title-level reporting is available. |
| YouTube Use | Track video title-level activity; block comments if necessary; monitor viewing by age group |
| Workaround Tactics | Equip staff with reports that show search patterns, flagged keywords, and online risks tied to student behaviour, not just blocked sites |
| Family Communication | Send clear, consistent guidance to parents and carers on age restrictions, app store controls, and how your school monitors children online |
| Age Assurance Awareness | Stay updated on the limits of age verification technologies and communicate clearly with staff and parents about realistic boundaries |
| Audit-Ready Reporting | Use Fastvue Reporter to generate clean activity timelines, collect evidence for incident response, and support child protection policies |

Final thoughts: Stay one step ahead

The incoming social media ban is a significant shift, but the consensus is that it won't be a blanket solution.

Young Australians will continue to find ways to interact with digital technologies despite the ban, with or without accounts. It isn't a school's job to stop access to everything. Schools do, however, have a social responsibility to minimise harm and understand how students are interacting with online content.

Only then can they help students to become responsible digital citizens, equipping them with the critical thinking needed to navigate an online environment that plays a growing role in their education, relationships, and future careers.

Fastvue Reporter helps schools stay informed, but not overwhelmed, giving you clear insights into what's happening on your network.

Don't take our word for it. Try for yourself.

Download Fastvue Reporter and try it free for 14 days, or schedule a demo and we'll show you how it works.
