Online Safety in Schools: Lessons from the Charlie Kirk Shooting

by

Bec May

You’ve likely already seen the headlines: Charlie Kirk, a prominent MAGA-aligned political commentator, was shot and killed last month during his American Comeback Tour event at Utah Valley University. Within minutes, eyewitness footage and graphic videos of the shooting were circulating on social media, spreading rapidly among teens eager to see for themselves what had happened.

The incident underscored much about the modern information ecosystem: how violent events are quickly politicized, sensationalized, and amplified online. It also showed that in an always-on, social media-saturated environment, the young people in our society and schools are now exposed to real-life violence in real time.

What was once a headline on the evening news now instantly appears on Facebook, TikTok, and YouTube, spreading like wildfire in group chats and search histories.

For schools and school districts, this raises a pressing safeguarding concern: when students are exposed to, or are actively searching for, violent political content, how can school administrators and school personnel separate normal curiosity and political interest from a warning sign that a student may be struggling, distressed, or at risk of radicalization?

Why schools need granular data when students search for graphic content

The Charlie Kirk shooting went viral almost instantly. Graphic video clips, eyewitness accounts, and political commentary were disseminated across social media feeds, and within hours, those same terms began appearing in student search logs on school-issued devices.

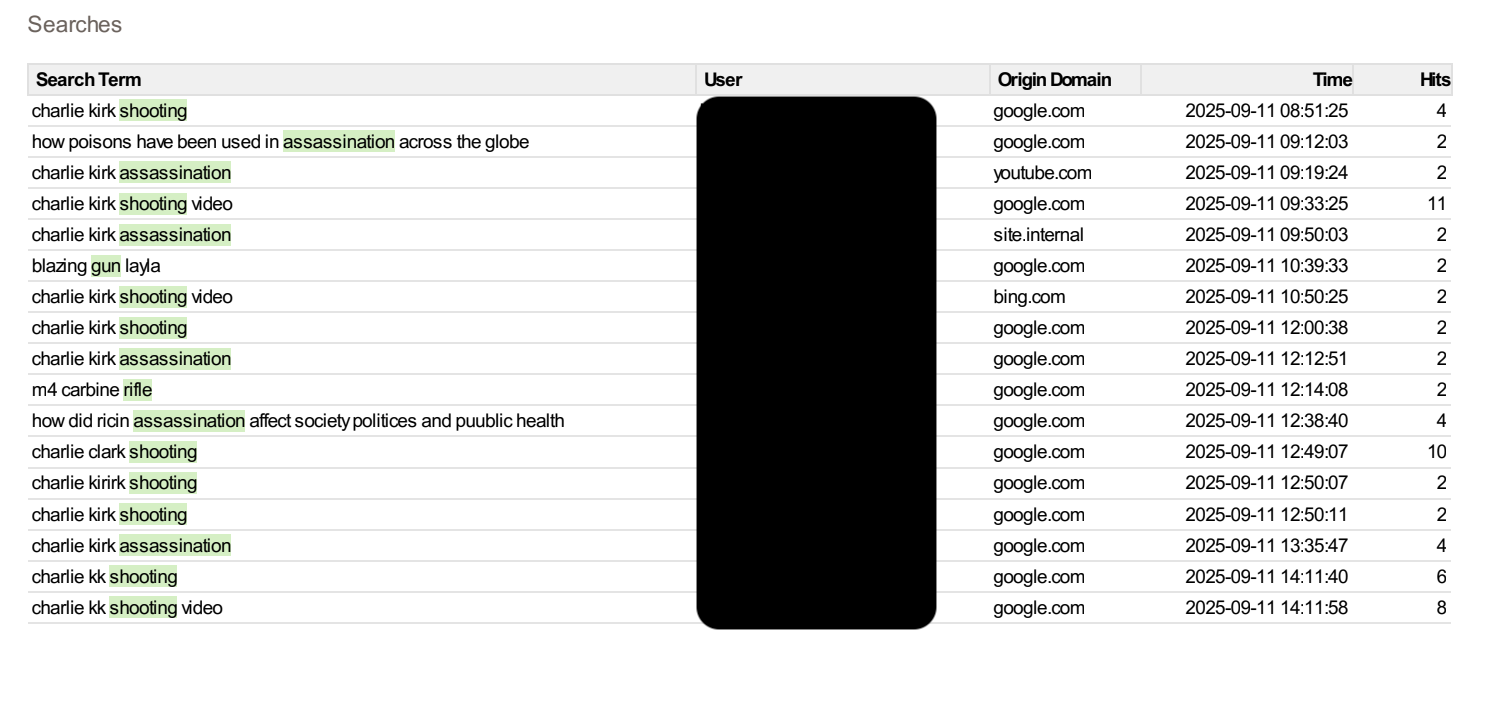

One school district using Fastvue Reporter saw this play out in real-time. Safeguarding reports flagged spikes in queries related to the fatal shooting, including 'Charlie Kirk assassination' and 'Charlie Kirk shooting video'.

While other school internet monitoring and reporting software may also surface these searches across a school network, it can often be difficult to discern the intent behind them. Was the student expressing political interest, curiosity, or a darker leaning towards extremist rhetoric? In addition, many internet monitoring and reporting solutions cannot show how long a student engaged with a piece of content, which section of the video was viewed, or which user account carried out the search.

Fastvue Reporter for Education closes these gaps. By integrating directly with the school’s user directory, our streamlined solution ties searches and video access back to specific student accounts rather than just IP addresses. This is combined with the context that matters for safeguarding, including:

The search term, video title, and thumbnail

The date and time the video was watched

The watch time, which helps identify whether the student saw the graphic content or left the site after a few seconds

Wellbeing leads can then drill down further if required, with self-serve user activity reports designed to reveal whether this was a one-time search or part of an escalating pattern of concerning internet activity.

For school district safeguarding staff, this changes the picture completely. Instead of vague logs that show a device accessed YouTube, wellbeing staff get granular insight showing, for example, that Student X searched for “Charlie Kirk shooting video,” watched three minutes of footage, and then returned to the topic repeatedly.

When paired with offline wellbeing insights, Fastvue reports can help distinguish one-off political interest from online exposure to graphic content that may suggest distress, trauma, or early signs of radicalization. With accurate, user-level evidence, school personnel can then make informed decisions about whether to discreetly check in, offer mental health support and resources, or escalate according to their internal safeguarding frameworks.
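To make the one-off versus repeated distinction concrete, here is a minimal Python sketch. It is purely illustrative and is not Fastvue Reporter's data model or API: the `ViewEvent` fields, the `summarise` function, and the 10-second cut-off are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ViewEvent:
    """Hypothetical record of one flagged video view (not a real export format)."""
    user: str
    search_term: str
    watched_at: datetime
    watch_seconds: int


def summarise(events, min_watch_seconds=10):
    """Summarise flagged views per user.

    Views shorter than min_watch_seconds are ignored, on the assumption
    that the student left before seeing much of the content.
    """
    per_user = defaultdict(list)
    for event in events:
        if event.watch_seconds >= min_watch_seconds:
            per_user[event.user].append(event)

    summary = {}
    for user, views in per_user.items():
        summary[user] = {
            "views": len(views),
            "distinct_days": len({v.watched_at.date() for v in views}),
            "total_watch_seconds": sum(v.watch_seconds for v in views),
            # More than one meaningful view is treated as a repeated pattern.
            "pattern": "repeated" if len(views) > 1 else "one-off",
        }
    return summary
```

A summary like this only describes online activity. As noted above, it still needs to be read alongside offline wellbeing insights before anyone decides how to respond.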

Custom keyword groups for monitoring harmful content in schools

Most student internet monitoring tools, including Fastvue Reporter, ship with standard keyword libraries for categories such as self-harm, extremism, drugs, and adult content. These are regularly updated; however, no static library can anticipate every crisis, news event, or viral video.

When something like the Charlie Kirk shooting occurs, students will be searching for names, hashtags, and phrases that are not necessarily in the default lists.

This is where custom keyword groups become invaluable, giving school IT teams the control to alert and report on specific online safety risks:

Names of individuals involved (e.g., Charlie Kirk, Tyler Robinson).

Venues or institutions (e.g., Utah Valley University, or a nearby campus such as Dixie Technical College).

Phrases and hashtags spreading online (e.g., “full video,” “uncensored footage”).

Variations of search terms suggested by Google autocomplete.

Schools can then set up real-time alerts for these custom keyword groups, ensuring the right personnel are notified at the right time.
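As a rough illustration of the idea behind a custom keyword group, the Python sketch below checks a search query against a list of phrases. It is not how Fastvue Reporter implements keyword groups: the `KeywordGroup` class and its `matches` method are hypothetical names, and the whole-phrase, case-insensitive matching rule is an assumption made for this example.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class KeywordGroup:
    """Hypothetical keyword group: a name plus the phrases to watch for."""
    name: str
    phrases: tuple[str, ...]

    def matches(self, query: str) -> list[str]:
        """Return the phrases found in the query (case-insensitive, whole words)."""
        # Lowercase and collapse repeated whitespace so "FULL  video" still matches.
        normalised = " ".join(query.lower().split())
        hits = []
        for phrase in self.phrases:
            pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
            if re.search(pattern, normalised):
                hits.append(phrase)
        return hits


# Phrases taken from the examples listed above.
incident_group = KeywordGroup(
    name="Utah Valley University incident",
    phrases=(
        "charlie kirk",
        "tyler robinson",
        "utah valley university",
        "full video",
        "uncensored footage",
    ),
)

print(incident_group.matches("Charlie  Kirk shooting FULL video"))
# → ['charlie kirk', 'full video']
```

Matching on whole words keeps unrelated queries such as "kirkland weather" from triggering an alert, which is one simple way to cut false positives.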

Building a digital safety framework with Fastvue Reporter

Custom keyword groups are great (if we do say so ourselves), but they are only one part of a larger online safety puzzle. The bigger challenge for any school district is knowing what to do once a search is flagged and an alert lands in your inbox. It's not enough to know that a student typed “Charlie Kirk shooting video” into Google on the school network. What really matters is what happens next: how that information flows into your school's student wellbeing framework and whether it leads to timely support for the student.

While most schools have rigorous protocols for addressing student wellbeing in the classroom and on the playground, many still struggle with how to address online safety concerns.

This is where a digital safeguarding framework can step in, complementing your existing child protection response guidelines.

Each school will obviously have its own requirements, shaped by its unique demographics, community, pastoral context, safeguarding policies, and risk assessments.

However, regardless of these factors, a strong framework should include:

Scheduled daily safeguarding reports summarising risky online activity, such as risky searches, attempts to access blocked unacceptable sites, and flagged video content across the school network.

Instant alerts for high-severity categories, such as self-harm or extremist material, routed directly to the relevant wellbeing or child protection lead.

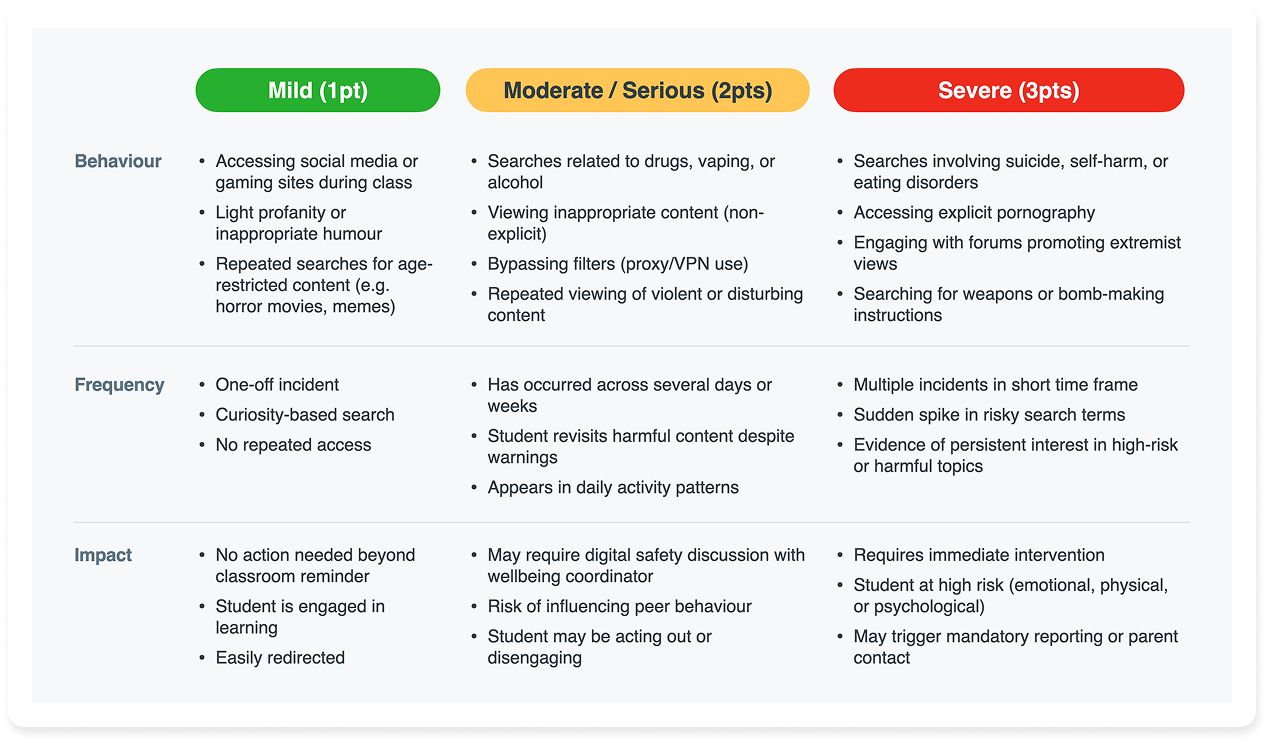

A Behavioural Risk Escalation Matrix (BREM) that grades incidents by severity (mild, moderate, serious, or severe) and provides clear guidance on how to respond. The matrix helps schools manage low-level concerns internally, while ensuring serious or repeated behaviour is escalated through the right channels, supported by leadership or external authorities when necessary.

A RACI-style accountability structure to clarify who receives alerts, who investigates, who acts, and who informs parents.

School Safety tip: Avoid siloing alerts to a single staff member. Shared visibility across IT, pastoral, and leadership roles ensures that critical concerns do not fall through the cracks.

Clear escalation paths (using models like Fastvue’s CLEAR framework) so that safeguarding teams know exactly who reviews, who acts, and who informs parents.

Parent communication protocols that explain why online safety monitoring is in place and reassure families that data is used only to protect children, not punish them.

The result? School administrators, IT teams, and pastoral staff all working from the same playbook, using the same student data, and responding consistently when harmful content or online abuse surfaces.

Fastvue's five-step safeguarding workflow

Modern digital safeguarding in schools must go beyond blocking sites or filtering searches. It's increasingly about building processes that help staff detect, assess, and respond to online harms with consistency and care. Whether you're dealing with viral video content containing violent footage or a student showing signs of distress via concerning searches, schools need monitoring systems that turn online activity into meaningful insights in order to keep children safe online.

Fastvue's Five-Step Safeguarding Workflow gives schools a practical framework for managing online safety issues, ensuring visibility, privacy, and accountability at every stage.

1. Detection

No school can protect children online without comprehensive visibility into its network activity. Every search, site visit, or attempt to access restricted material forms part of a student's digital footprint and can reveal early signs of distress or risk.

The detection phase is where monitoring systems do their best work. Fastvue Reporter analyses your school's firewall logs to detect online harms such as self-harm, extremism, drug content, and adult material through built-in keyword libraries and unacceptable web categories.

Best practice setup:

Schedule the Daily Safeguarding Report for all students to summarise high-risk online behaviour.

Configure instant alerts for self-harm searches and critical terms so the designated person is notified immediately.

Create additional reports and alerts for specific cohorts (e.g., boarders, year groups) to direct incidents to the correct staff.

Goal: ensure early visibility of risk before behaviour escalates.

2. Triage

Triage is the sorting stage, where the Designated Safeguarding Lead (DSL) or wellbeing lead reviews new alerts and determines their priority using the Behavioural Risk Escalation Matrix (BREM) (see images below). This process converts raw alerts into structured risk assessments.

The DSL reviews:

What was searched or viewed

When it occurred and for how long

Whether it appears isolated or repeated

Whether it relates to a current safeguarding concern or news event

They then score the incident against the BREM categories:

Behaviour: nature of the content (e.g., violent, explicit, or self-harm related)

Frequency: one-off or repeated pattern

Impact: potential harm or distress level

Response levels:

Mild: record and monitor

Moderate: notify pastoral or wellbeing staff

Serious/Severe: escalate to leadership or child protection

If time-critical: Skip ahead to Action, contact parents or emergency services, and return later for documentation.

Triage ensures the right people respond to the right issues quickly, keeping digital safeguarding efficient and evidence-based.
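The triage scoring described above can be sketched in a few lines of Python. This is an illustration only, not the published BREM: the 1 to 3 scale for each category, the summed score, and the thresholds are all assumptions made for this example, and the `Incident` and `grade` names are hypothetical. Only the category names, severity levels, and response wording come from the text above.

```python
from dataclasses import dataclass

# Response guidance taken from the response levels listed above.
RESPONSES = {
    "mild": "record and monitor",
    "moderate": "notify pastoral or wellbeing staff",
    "serious": "escalate to leadership or child protection",
    "severe": "escalate to leadership or child protection",
}


@dataclass(frozen=True)
class Incident:
    """Hypothetical triage scores, each on an assumed scale of 1 (low) to 3 (high)."""
    behaviour: int  # nature of the content
    frequency: int  # one-off or repeated pattern
    impact: int     # potential harm or distress level


def grade(incident: Incident) -> str:
    """Map the three scores to a severity level using assumed thresholds."""
    scores = (incident.behaviour, incident.frequency, incident.impact)
    if any(score not in (1, 2, 3) for score in scores):
        raise ValueError("each score must be 1, 2, or 3")
    total = sum(scores)  # ranges from 3 to 9
    if total <= 4:
        return "mild"
    if total <= 6:
        return "moderate"
    if total <= 8:
        return "serious"
    return "severe"


level = grade(Incident(behaviour=3, frequency=2, impact=1))
print(level, "->", RESPONSES[level])
# → moderate -> notify pastoral or wellbeing staff
```

A real matrix would be calibrated by the school's safeguarding team, and a time-critical concern should bypass scoring altogether, as described above.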

3. Investigation

Once triage confirms an incident is a genuine concern and needs further attention, the appropriate staff member can begin to investigate. This involves gathering full context from Fastvue and school staff before any intervention.

Key investigation steps may include:

Running a User Activity Report to see the user’s search history over a particular time period.

Comparing recent Safeguarding Reports to examine repeated patterns or emerging trends.

Consulting with pastoral staff or teachers to add behavioural or classroom context.

Goal: Validate the BREM rating and determine whether intervention, monitoring, or escalation is appropriate.

4. Action

The Action stage ensures that safeguarding decisions are made in a timely and well-documented manner. Schools should align this stage with their RACI matrix to avoid gaps in accountability.

Typical actions include:

Mild incidents: Recording the event in the school's online safety system, and continuing observation

Moderate incidents: Schools may elect to hold a wellbeing conversation, notify parents, and provide ongoing digital safety education

Severe incidents: May be escalated immediately to leadership or external services such as mental health professionals

Documentation and communication:

Log incidents in your school management system

Follow your Parent Communication Policy to ensure conversations are supportive, not punitive

Use Fastvue’s summarised reports instead of forwarding raw data to maintain privacy and reduce misunderstanding

5. Review

The final step, Review, ensures that your online safety framework continually evolves in response to student behaviour and online trends.

Recommended review practices:

Revisit reports weekly to identify repeat themes or new online harms

Update keyword groups and alert thresholds to reduce false positives

Review escalation paths and staff coverage to prevent missed alerts

Conduct regular training refreshers using anonymised examples

Goal: build a proactive, data-driven culture of online safety that evolves with the digital landscape.

Online safety requires more than just monitoring

As schools navigate the next phase of the digital revolution, protecting students online has evolved beyond simply monitoring searches or blocking access to harmful sites. Schools need to understand why a student searched for something, what they actually engaged with, and whether it's part of a broader pattern of risk. These granular details provide the necessary context to respond effectively via a clear path of action.

Fastvue Reporter provides more than just a software solution. We partner with schools to inform and enhance their existing online safety frameworks, from technical setup to staff training and policy support. Whether it's configuring daily reports, building custom keyword groups, or establishing escalation protocols, we're with you every step of the way.

In the UK, Fastvue aligns with the Keeping Children Safe in Education (KCSIE) requirements and the UK Safer Internet Centre’s expectations for appropriate educational monitoring. Globally, schools use Fastvue to lead in online safety, not lag behind.

If your school is ready to move beyond reactive filtering and towards a proactive, privacy-first safeguarding strategy, we’re ready to help.

Don't take our word for it. Try for yourself.

Download Fastvue Reporter and try it free for 14 days, or schedule a demo and we'll show you how it works.
