Improving Digital Safety in K-12 Schools with Smart Digital Safeguarding

by

Bec May

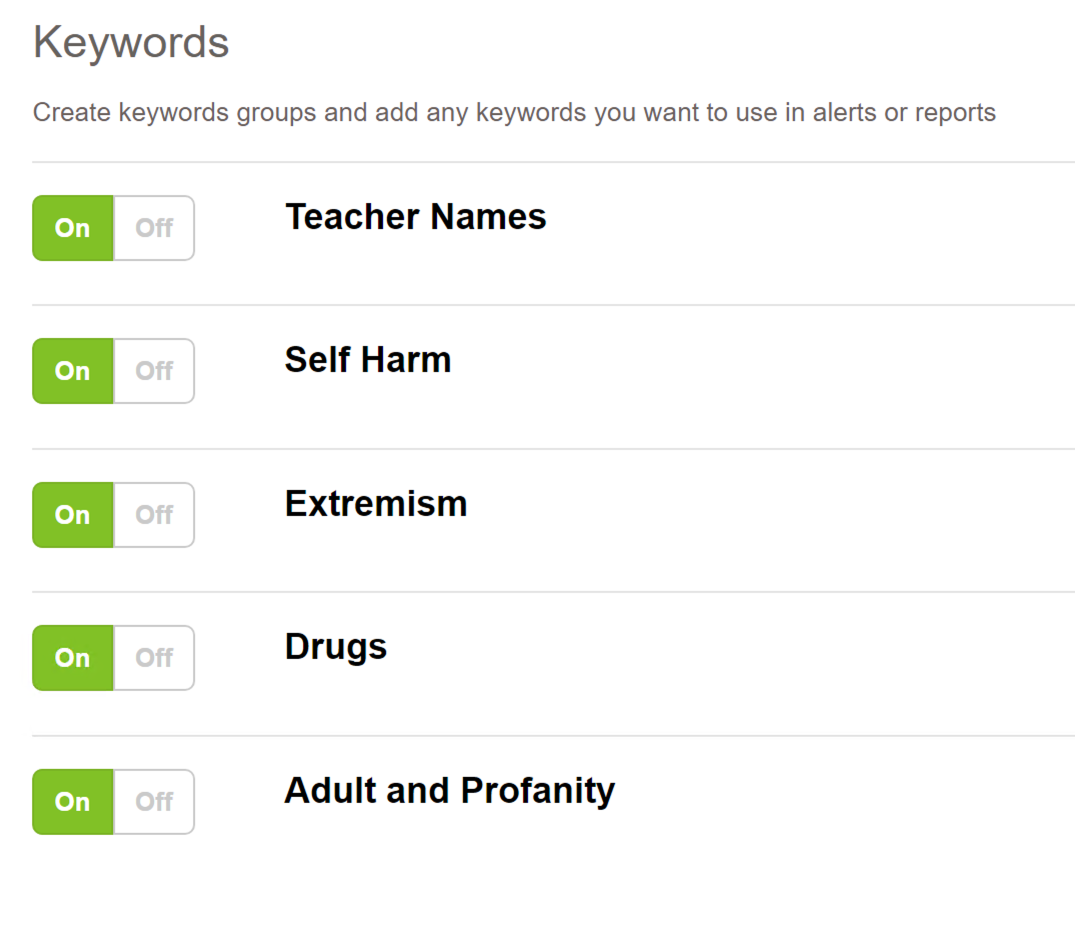

Digital safeguarding in K-12 schools isn't just about blocking 'bad' websites. It's about understanding the risks students face, identifying the digital fingerprints of harm, and providing schools with the tools to act before it's too late. From self-harm indicators to grooming, to extremist and violent intent, the warning signs are often visible in network activity long before they spill into the real world.

The part that many school leaders don't realize? This data already exists in their infrastructure. Every firewall on campus is quietly logging these warning signs, from violent searches to visits to high-risk applications. The problem is that it's buried in raw logs that no safeguarding lead or well-being officer can realistically interpret.

That’s where digital safeguarding solutions like Fastvue Reporter for Education step in. By transforming firewall data into clear, real-time insights, they enable the early detection of risks, allow for appropriate intervention, and support students before issues escalate.

Let’s take a closer look at the major safety risks facing US K-12 schools, and how the right digital safeguarding approach can help.

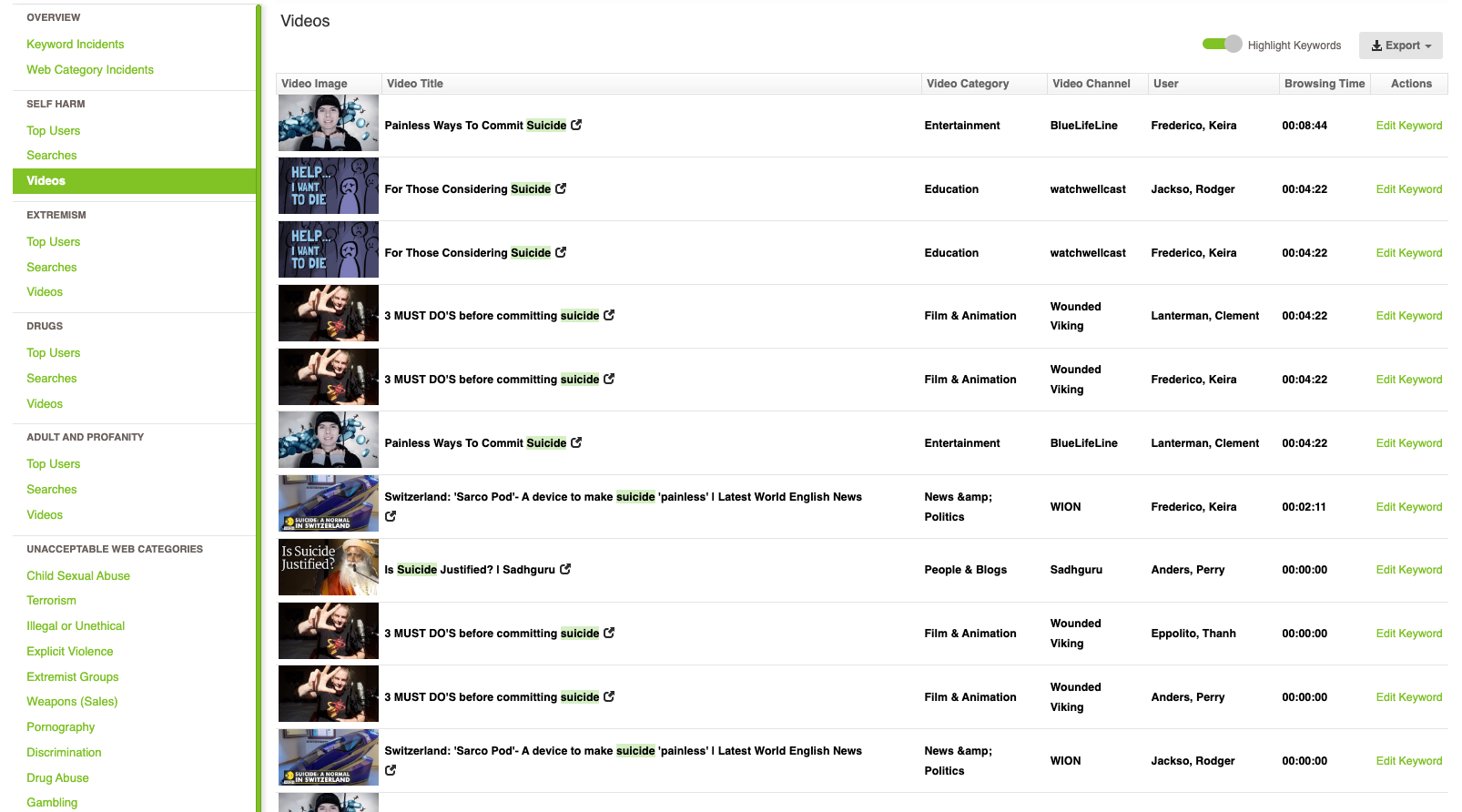

Detecting self-harm and suicide risk in US school students

Self-harm and suicide risk are now recognized by the CDC, SAMHSA, and state education departments as top safeguarding priorities for schools. The CDC’s Youth Risk Behavior Survey found that 1 in 5 high school students (20.4%) seriously considered attempting suicide, and nearly 1 in 10 (9.5%) reported a suicide attempt. According to the data, rates are higher among females and LGBTQ+ students. These groups often face additional barriers to support, including stigma, fear of disclosure, or lack of culturally responsive mental health services.

Unfortunately, traditional ‘block everything’ filtering can actually exacerbate the situation for these groups. Reviews of school filtering practices show that many LGBTQIA+ mental health and support websites, including crisis lines, peer communities, and therapy resources, are often wrongly categorized as adult or mature content. Blocking these valuable lifelines denies vulnerable students access to help, even as their digital activity shows signs of crisis.

This is why proportionate digital safeguarding is essential. Instead of indiscriminately blocking, Fastvue Reporter for Education highlights patterns of concerning searches and access attempts while allowing schools to maintain access to legitimate support resources. The focus shifts from locking down the internet and hoping for the best to obtaining accurate, actionable insights that provide schools with the evidence they need to respond effectively.

Suicide and self-harm risks often escalate quickly and in silence. Research shows that fewer than half of people with suicidal thoughts or behaviors tell anyone, which means many students never voice their struggles to parents, teachers, or peers. For teenagers, the internet has become less of an encyclopedia and more of a confidant: the first place they turn to express distress, search for methods, or seek communities that may normalize harmful behavior.

Repeated searches for self-harm methods, visits to forums that normalize harm, or sudden changes in browsing patterns are often early warning signs, but without the right visibility, they remain hidden inside firewall logs.

Fastvue Reporter provides real-time alerts and easy-to-read reports, enabling schools to act before risks escalate. By focusing on the signals that matter, it reduces false positives and ensures that safeguarding staff see only what is relevant.

What Fastvue highlights at the network level:

Search intent patterns: repeated queries around suicide methods, self-harm forums, or phrases like “how to hide cuts.”

High-risk category access attempts: persistent visits or attempts to reach sites classified as self-harm or suicide content.

Escalation trends: frequency and severity of flagged searches rising over time, helping distinguish one-off curiosity from emerging risk.

Group-level visibility: patterns across classes, grades, or groups, showing whether the issue is isolated or widespread.
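To make the last two signals more concrete, here is a minimal Python sketch of how escalation trends and group-level visibility could be computed from searches that have already been flagged. It is an illustration only, not how Fastvue Reporter works internally. The record layout, the user and group names, and the "strictly rising for three weeks" rule are all assumptions made for the example.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical, already-flagged events: (user, group, day of the flagged search).
# Real firewall exports use different fields; these values are illustrative only.
FLAGGED_EVENTS = [
    ("student_a", "grade_9", date(2025, 3, 3)),
    ("student_a", "grade_9", date(2025, 3, 11)),
    ("student_a", "grade_9", date(2025, 3, 12)),
    ("student_a", "grade_9", date(2025, 3, 18)),
    ("student_a", "grade_9", date(2025, 3, 19)),
    ("student_a", "grade_9", date(2025, 3, 20)),
    ("student_b", "grade_10", date(2025, 3, 12)),
]


def weekly_counts(events):
    """Count flagged events per user per ISO (year, week)."""
    counts = defaultdict(Counter)
    for user, _group, day in events:
        iso = day.isocalendar()
        counts[user][(iso[0], iso[1])] += 1
    return counts


def escalating_users(events, min_weeks=3):
    """Return users whose weekly counts rise strictly over at least min_weeks active weeks.

    Weeks with no flagged events are skipped rather than counted as zero,
    which is a simplification chosen for this sketch.
    """
    result = []
    for user, per_week in weekly_counts(events).items():
        series = [per_week[week] for week in sorted(per_week)]
        rising = all(a < b for a, b in zip(series, series[1:]))
        if len(series) >= min_weeks and rising:
            result.append(user)
    return sorted(result)


def users_per_group(events):
    """Count distinct flagged users per group (isolated versus widespread)."""
    members = defaultdict(set)
    for user, group, _day in events:
        members[group].add(user)
    return {group: len(users) for group, users in members.items()}


print(escalating_users(FLAGGED_EVENTS))
print(users_per_group(FLAGGED_EVENTS))
```

A single flagged search, like the one from the hypothetical `student_b`, does not trip the escalation rule, which is the distinction between one-off curiosity and emerging risk described above.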

Identifying the risk factors of school violence and school shootings

High-profile tragedies like the Columbine High School shooting, which the FBI heavily researched, showed that violence in educational institutions doesn't erupt from nowhere. Students responsible for these incidents usually leave a trail of digital warning signs in their online activities: repeated violent searches, engagement with harmful content, or discussions on social media platforms and gaming chats.

School districts that rely solely on physical security measures, such as metal detectors, camera systems, and on-campus security staff, may miss these early digital red flags. By monitoring school network traffic and applying risk assessments to identify suspicious patterns, schools can intervene before online hostility escalates into offline tragedy. A proactive approach to student safety, combining both physical and digital safeguards, offers the most effective chance of identifying and addressing these behaviours before they escalate into violence.

Why digital warning signs matter: What FBI research reveals about school shootings

The FBI's research indicates that school shootings are rarely impulsive. Its analysis found that:

93% of attackers meticulously plan their actions in advance, rather than acting in the heat of the moment

95% of attacks were carried out by current students at the school

75% of perpetrators had experienced bullying or social rejection beforehand

Many of the shooters leaked their intentions beforehand, either online or in person, through threats, social media posts, and behavioural changes

They recommend establishing threat assessment teams (educators, mental health professionals, law enforcement) and investing in anonymous reporting systems, which have proven to reduce violence in schools.

| Incident | Year | Online Warning Signs | What Was Missed |
|---|---|---|---|
| Columbine (Colorado) | 1999 | Personal blog with bomb-making instructions and threats; hateful posts | Teachers and law enforcement dismissed early complaints about the blog |
| Red Lake (Minnesota) | 2005 | Violent Flash animations, neo-Nazi forum posts, and the glorification of Columbine | No monitoring of extremist online activity tied to student identity |
| Uvalde (Texas) | 2022 | Social media posts with firearms, violent threats, and troubling images | Disturbing posts were seen by peers but not flagged by systems |
| Covenant School (Nashville) | 2023 | Online manifesto, searches on past shootings, suicidal messages | Clear signs of distress were not escalated to intervention teams |
| Antioch (Tennessee) | 2025 | Extremist forum activity, racist ideology posts, school floorplan shared online, livestreamed attack | No early disruption of online extremist engagement or cross-platform chatter |

How Fastvue Reporter can help surface digital warning signs

These cases demonstrate that school violence doesn't usually come from nowhere; more often than not, these incidents are preceded by digital breadcrumbs. With the right digital safeguarding setup, schools can detect:

Repeated violent or weapon-related searches

Access to extremist or harmful content

Visits to questionable forums and sites known to host extremist rhetoric

Fastvue converts raw firewall data into real-time alerts and user-centric reports, giving IT and safeguarding teams the tools to intervene before it’s too late.
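As a rough illustration of what "converting raw firewall data" into user-centric findings involves, the Python sketch below pulls search phrases out of logged URLs and groups watchlist matches by user. It is not Fastvue's implementation. The watchlist, the query parameter names, and the `(user, url)` record shape are hypothetical, and the sketch assumes the firewall logs full URLs, which for HTTPS traffic typically requires TLS inspection.

```python
from collections import defaultdict
from urllib.parse import parse_qs, urlparse

# Hypothetical watchlist; a real deployment would rely on a maintained,
# reviewed keyword set rather than this illustrative one.
WATCHLIST = {
    "weapons": ["buy a gun", "bomb-making"],
    "extremism": ["neo-nazi forum"],
}

# Query parameter names often used for search terms (assumed, not exhaustive).
SEARCH_PARAMS = ("q", "query", "search_query")

# Hypothetical log records: (user, requested URL).
RECORDS = [
    ("student_a", "https://www.example.com/search?q=how+to+buy+a+gun"),
    ("student_b", "https://www.example.com/search?q=algebra+homework"),
    ("student_a", "https://www.example.com/page"),
]


def search_terms(url):
    """Return the decoded, lower-cased search phrase from a URL, or None."""
    params = parse_qs(urlparse(url).query)
    for name in SEARCH_PARAMS:
        if name in params:
            return params[name][0].lower()
    return None


def flag_searches(records):
    """Group watchlist matches by user as (category, phrase) pairs."""
    hits = defaultdict(list)
    for user, url in records:
        phrase = search_terms(url)
        if phrase is None:
            continue  # not a search request
        for category, keywords in WATCHLIST.items():
            if any(keyword in phrase for keyword in keywords):
                hits[user].append((category, phrase))
    return dict(hits)


print(flag_searches(RECORDS))
```

Plain substring matching like this is easy to follow but prone to false positives, which is why any real system needs curated categories and human review before anyone acts on a match.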

Inappropriate staff–student relationships in the digital age

Many schools may still want to turn a blind eye to this issue, but one only needs to turn on the nightly news to see another case of a teacher being accused of inappropriate conduct with a student under their care.

Unfortunately, inappropriate staff-student relationships aren't new. They've been documented for decades, with many teachers facing criminal charges in the wake of these allegations. But even as awareness and training have increased, the problem hasn't diminished. Recent investigations suggest it's reaching a crisis point. A 2014 report by the US Government Accountability Office (GAO) entitled “Federal Agencies Can Better Support State Efforts to Prevent and Respond to Sexual Abuse by School Personnel” found that sexual grooming of students by public school employees remains a persistent and growing problem. The report highlighted that many cases involve the use of school resources and online services, including email, devices, and internet access, as the first points of contact and that these can 'be the key to identifying and preventing the criminal act of sexual abuse.'

A 2025 New York Post investigation revealed 121 substantiated cases of modern technology being used by New York City educators to commit child sexual exploitation between 2018 and 2024. These teachers used personal phones, Snapchat, and other social media platforms to request nude photos and offer money, gifts, and drugs to students.

One of these educators exchanged over 700 inappropriate messages with a 15-year-old student and behaved inappropriately in his empty classroom. Yet another teacher exchanged an astonishing 9,000 texts with a male student before witnesses raised the alarm after spotting the two hugging alone in a classroom.

There is a major concern that the growing use of technology and social media as a convenient way for adults and students to interact is blurring the lines between what is and isn't appropriate between school leaders and students. So much so that between 2019 and 2023, New York City's Special Commissioner of Investigation (SCI) issued 54 separate recommendations urging the Department of Education (DOE) to ban staff from contacting students via personal cell phones, social media, or other apps. Yet, the DOE declined to enforce the ban.

Matters are further complicated when married school staff attempt to keep these interactions away from their spouses by using the school network to conduct these illegal online activities. Christina Formella, a married special education teacher, was recently accused of sexually abusing a 15-year-old student at least 45 times. Much of the early contact took place during the school day on school networks and devices, before eventually escalating off-platform and into the real world. Shockingly, there are no laws in place to prevent this type of contact from occurring on school devices.

When policy lags behind real life, school districts need a practical plan for safety in K-12 schools that encompasses both online safety and offline safeguarding, ensuring that student safety and well-being are prioritized across educational institutions.

Non-digital warning signs school leaders should look for

Boundary testing, for example, scheduling one-on-one time without an apparent educational reason, giving gifts, showing special treatment, and secrecy about meetings.

Emotional dependence, such as a student describing a 'special' relationship, or showing visible signs of distress when approached by the adult in question.

Changes in student behaviour, such as sudden absences, academic decline, or social withdrawal from their peers

Digital grooming signals that your network can surface

Fastvue transforms your school's raw firewall logs into user-level insights that can flag when a student may be at risk, showing you:

Repeated high-risk searches by students, for example, 'Is it okay for a teacher to hug a student?' or 'What can teachers do with students?'

Staff access patterns that do not fit with their current role, for example, repeated visits to social media websites during teaching hours, or out-of-hours logins to webmail or image hosts

Searches by students for a teacher’s name, number, or other identifying details online
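The staff access pattern above can be sketched in a few lines of Python. This is an illustrative example under stated assumptions, not Fastvue's logic: the school hours, category names, record layout, and the threshold of two events are all hypothetical and would need to match local policy and the categories your firewall actually reports.

```python
from datetime import datetime, time

# Hypothetical school hours and category names; adjust to local policy
# and to whatever categories the firewall actually reports.
SCHOOL_START = time(8, 0)
SCHOOL_END = time(16, 0)
WATCHED_CATEGORIES = {"webmail", "image-hosting"}

# Hypothetical log records: (staff user, site category, timestamp).
RECORDS = [
    ("staff_x", "webmail", datetime(2025, 3, 3, 22, 15)),       # Monday night
    ("staff_x", "image-hosting", datetime(2025, 3, 8, 10, 0)),  # Saturday
    ("staff_y", "webmail", datetime(2025, 3, 4, 12, 30)),       # Tuesday midday
    ("staff_y", "news", datetime(2025, 3, 4, 23, 0)),           # unwatched category
]


def is_out_of_hours(moment):
    """True on weekends or outside the assumed school-day window."""
    if moment.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (SCHOOL_START <= moment.time() < SCHOOL_END)


def out_of_hours_access(records, min_events=2):
    """Return users with at least min_events watched-category hits out of hours."""
    counts = {}
    for user, category, moment in records:
        if category in WATCHED_CATEGORIES and is_out_of_hours(moment):
            counts[user] = counts.get(user, 0) + 1
    return {user: n for user, n in counts.items() if n >= min_events}


print(out_of_hours_access(RECORDS))
```

A result like this is a prompt for a safeguarding lead to look closer, since plenty of out-of-hours webmail use is entirely legitimate.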

Even as awareness of digital grooming risks grows, schools are still caught between policy gaps and visibility gaps. Policies may prohibit inappropriate staff–student contact, but without the ability to see how school networks and devices are being used, those rules can’t be enforced until it’s too late. This is where Fastvue comes in. By turning firewall logs into clear, actionable reports, Fastvue helps schools bridge the gap between what policies say and what actually happens online, surfacing early signs of digital grooming so leaders can act before behaviour escalates offline.

Cybersecurity and digital citizenship in K-12 schools

Digital safety in K-12 schools is not simply about monitoring for harmful behaviour or blocking risky internet sites. It's also about teaching students the skills needed to navigate their online environment responsibly and securely. With the rise of cyber safety concerns, such as phishing scams, identity theft, and the misuse of children's personal information, educational institutions must ensure that students learn how to protect themselves. Compliance frameworks, such as the Children's Internet Protection Act (CIPA) and the Children's Online Privacy Protection Act (COPPA), reinforce this responsibility by requiring schools and technology providers to implement safeguards that limit exposure to inappropriate content and protect students' data and online privacy.

Building digital citizenship in US school districts

Students need more than just blanket rules and blanket blocks. They need structured lessons in digital citizenship that empower them to take control of their own digital experiences. Lesson plans should include:

How to spot phishing emails and shady online services

How to create strong passwords for defending against data breaches

When and how to safeguard sensitive information, including personal identifiers

How to use privacy controls responsibly, balancing safety with data privacy

For schools looking to build or strengthen these programs, the following sites offer some invaluable resources:

The U.S. Department of Education's Keeping Students Safe Online site offers practical resources on responsible online behavior, safe technology use, and strategies for digital resilience.

The Department of Homeland Security’s Stop.Think.Connect. campaign provides teacher toolkits, student lesson plans, and parent guides to help communities address online risks.

These resources offer districts ready-made frameworks to integrate digital safety education into their daily teaching practices.

How Fastvue Helps Schools Meet CIPA and COPPA Requirements

Teaching digital citizenship is important, but it’s only part of the picture. Schools in the United States also carry legal obligations to monitor and protect students online, particularly in the face of rising threats like violent extremism, harmful content, and self-harm risks.

Under the Children’s Internet Protection Act (CIPA), schools receiving E-Rate discounts must adopt an internet safety policy that includes monitoring students’ online activity and blocking access to obscene or harmful content. At the same time, the Children’s Online Privacy Protection Act (COPPA) restricts how online services collect and share personal information from children under 13 without parental consent, which shapes the tools schools can responsibly deploy.

This creates a tricky balancing act. Monitor too much and you risk overstepping privacy boundaries. Monitor too little and you may fall short of federal requirements or miss early warning signs of distress.

Fastvue Reporter helps schools walk that line.

Instead of relying on invasive monitoring tools or device agents, Fastvue works directly with your school’s existing enterprise firewall. It reads the logs already being generated, without installing anything new or collecting additional personal information. If a school has already obtained parental consent for the firewall, there is no need to obtain it again to use Fastvue.

With Fastvue, schools can:

Meet CIPA requirements by turning firewall logs into clear reports that show risky searches, access attempts, and emerging trends across student groups

Respect COPPA by focusing on behavioural indicators that are relevant to policy, without logging every screen or collecting unnecessary student data

Fastvue Reporter is built with privacy by design. It flags the searches and patterns that matter to safeguarding teams and school wellbeing staff. Nothing more, nothing less.

The result is a practical, privacy-respecting approach to compliance that enables schools to act proactively, intervene when necessary, and demonstrate due diligence in digital safety.

Final Thoughts

For US school districts, meeting digital safeguarding duties means more than blanket blocks. It means supporting your students’ digital citizenship and identifying early-warning signs of concern, all while staying compliant with laws such as CIPA and COPPA. Fastvue Reporter turns the firewall you already have into a practical safeguarding system, delivering clear, real-time insights while maintaining student privacy.

Don't take our word for it. Try for yourself.

Download Fastvue Reporter and try it free for 14 days, or schedule a demo and we'll show you how it works.
